4G (also known as beyond 3G), an acronym for Fourth-Generation Communications System, is a term used to describe the next step in wireless communications. A 4G system will be able to provide a comprehensive IP solution where voice, data and streamed multimedia can be given to users on an "Anytime, Anywhere" basis, and at higher data rates than previous generations. There is no formal definition for what 4G is; however, there are certain objectives that are projected for 4G.

These objectives include a fully IP-based integrated system, achieved once wired and wireless technologies converge, that is capable of providing speeds between 100 Mbit/s and 1 Gbit/s both indoors and outdoors, with premium quality and high security. 4G is also expected to offer all types of services at an affordable cost.

Objective and Approach

--------------------------------------

Objectives

4G is being developed to accommodate the quality of service (QoS) and rate requirements set by forthcoming applications like wireless broadband access, Multimedia Messaging Service, video chat, mobile TV, High definition TV content, DVB, minimal service like voice and data, and other streaming services for "anytime-anywhere". The 4G working group has defined the following as objectives of the 4G wireless communication standard:

-A spectrally efficient system (in bit/s/Hz and bit/s/Hz/site),

-High network capacity: more simultaneous users per cell,

-A nominal data rate of 100 Mbit/s while the client physically moves at high speeds relative to the station, and 1 Gbit/s while client and station are in relatively fixed positions as defined by the ITU-R,

-A data rate of at least 100 Mbit/s between any two points in the world,

-Smooth handoff across heterogeneous networks,

-Seamless connectivity and global roaming across multiple networks,

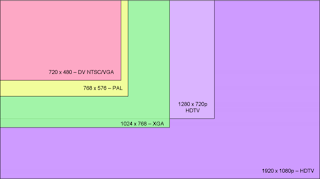

-High quality of service for next generation multimedia support (real time audio, high speed data, HDTV video content, mobile TV, etc.),

-Interoperability with existing wireless standards, and

-An all IP, packet switched network.

In summary, the 4G system should dynamically share and utilise network resources to meet the minimal requirements of all the 4G enabled users.
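The first objective above is expressed in bit/s/Hz. As a rough illustration, spectral efficiency is simply the data rate divided by the occupied bandwidth. The sketch below applies that to the two target rates; the channel bandwidths (20 MHz and 100 MHz) are assumptions picked for the example and are not part of the stated objectives.

```python
def spectral_efficiency(data_rate_bps: float, bandwidth_hz: float) -> float:
    """Return spectral efficiency in bit/s/Hz."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return data_rate_bps / bandwidth_hz


# Target rates come from the objectives; the bandwidths are hypothetical.
mobile = spectral_efficiency(100e6, 20e6)   # 100 Mbit/s in an assumed 20 MHz channel
fixed = spectral_efficiency(1e9, 100e6)     # 1 Gbit/s in an assumed 100 MHz channel

print(f"mobile: {mobile:.1f} bit/s/Hz, fixed: {fixed:.1f} bit/s/Hz")
```

With a narrower assumed channel the required efficiency rises in proportion, which is why the objectives pair high data rates with a spectrally efficient air interface.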

Approaches

As described by 4G consortia including WINNER (Wireless World Initiative New Radio, a consortium working to enhance mobile communication systems; see "WINNER - Towards Ubiquitous Wireless Access") and WWRF, a key-technology-based approach can be summarized as follows.

Consideration points

-Coverage, radio environment, spectrum, services, business models and deployment types, users

Principal technologies

-Baseband techniques

--OFDM: To exploit the frequency selective channel property

--MIMO: To attain ultra high spectral efficiency

--Turbo principle: To minimize the required SNR at the reception side

-Adaptive radio interface

-Modulation, MIMO spatial processing including multi-antenna and multi-user MIMO, relaying including fixed relay networks (FRNs) and the cooperative relaying concept, multi-mode protocol

It introduces a single new ubiquitous radio access system concept that is flexible enough to cover a variety of beyond-3G wireless systems.

Wireless System Evolution

--------------------------------------

First generation: Almost all of the systems from this generation were analog systems where voice was considered to be the main traffic. These systems could often be listened to by third parties. Some of the standards are NMT, AMPS, Hicap, CDPD, Mobitex, and DataTac.

Second generation: All the standards belonging to this generation are commercial centric and they are digital in form. Around 60% of the current market is dominated by European standards. The second generation standards are GSM, iDEN, D-AMPS, IS-95, PDC, CSD, PHS, GPRS, HSCSD, and WiDEN.

Third generation: To meet the growing demands in the number of subscribers (increase in network capacity) and the rates required for high speed data transfer and multimedia applications, 3G standards started evolving. The systems in this generation are basically a linear enhancement of 2G systems. They are based on two parallel backbone infrastructures, one consisting of circuit switched nodes, and one of packet oriented nodes. The ITU defines a specific set of air interface technologies as third generation, as part of the IMT-2000 initiative. Currently, the transition from 2G to 3G systems is under way. As a part of this transition, a lot of technologies are being standardized. From 2G to 3G: 2.75G - EDGE and EGPRS, 3G - CDMA 2000, W-CDMA or UMTS (3GSM), FOMA, 1xEV-DO/IS-856, TD-SCDMA, GAN/UMA. Similarly, from 3G to 4G: 3.5G - HSDPA, HSUPA, Super3G - HSOPA/LTE.

Fourth generation: According to the 4G working groups, the infrastructure and the terminals of 4G will have almost all the standards from 2G to 4G implemented. Even though legacy systems will remain in place in 4G for existing legacy users, going forward the infrastructure will be packet-based only (all-IP). Also, some proposals suggest having an open platform where new innovations and evolutions can fit. The technologies being considered as pre-4G are WiMax, WiBro, 3GPP Long Term Evolution and 3GPP2 Ultra Mobile Broadband.

Components

--------------------------------------

Access schemes

As the wireless standards evolved, the access techniques used also became more efficient, higher in capacity, and more scalable. The first generation wireless standards used plain TDMA and FDMA. In wireless channels, TDMA proved to be less efficient at handling high data rate channels because it requires large guard periods to alleviate the impact of multipath. Similarly, FDMA consumed more bandwidth for guard bands to avoid inter-carrier interference. So in second generation systems, one set of standards used a combination of FDMA and TDMA and the other set introduced a new access scheme called CDMA. Using CDMA increased the system capacity and also placed a soft limit on it rather than a hard limit. The data rate also increased, as this access scheme is efficient enough to handle the multipath channel. This enabled third generation systems such as IS-2000, UMTS, HSXPA, 1xEV-DO, TD-CDMA and TD-SCDMA to use CDMA as the access scheme. The only issue with CDMA is that it suffers from poor spectrum flexibility and scalability.

Recently, new access schemes like Orthogonal FDMA (OFDMA), Single Carrier FDMA (SC-FDMA), Interleaved FDMA (IFDMA) and Multi-carrier code division multiple access (MC-CDMA) are gaining more importance for the next generation systems. WiMax uses OFDMA in both the downlink and the uplink. For the next generation UMTS, OFDMA is being considered for the downlink. By contrast, IFDMA is being considered for the uplink, since OFDMA has a higher peak-to-average power ratio (PAPR), which pushes amplifiers into nonlinear operation. IFDMA provides less power fluctuation and thus avoids amplifier issues. Similarly, MC-CDMA is in the proposal for the IEEE 802.20 standard. These access schemes offer the same efficiencies as older technologies like CDMA. Apart from this, scalability and higher data rates can be achieved.
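The PAPR concern can be made concrete with a small numerical sketch. It builds one OFDM symbol as the inverse DFT of its subcarrier symbols and compares its peak-to-average power ratio with a constant-envelope reference. The 16-subcarrier size and the worst-case choice of loading every subcarrier with the same QPSK symbol are assumptions made for the illustration, and the reference is sampled at symbol rate without pulse shaping, so it is an idealisation rather than a model of IFDMA itself.

```python
import cmath
import math


def papr_db(samples):
    """Peak-to-average power ratio of a complex sample sequence, in dB."""
    powers = [abs(s) ** 2 for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


def ofdm_symbol(subcarrier_symbols):
    """Time-domain OFDM symbol: inverse DFT of the per-subcarrier symbols."""
    n = len(subcarrier_symbols)
    return [
        sum(x * cmath.exp(2j * math.pi * k * t / n)
            for k, x in enumerate(subcarrier_symbols)) / n
        for t in range(n)
    ]


# Worst case: all 16 subcarriers carry the same QPSK symbol, so they add
# coherently at t = 0 and nearly cancel everywhere else.
qpsk = (1 + 1j) / math.sqrt(2)
single_carrier = [qpsk] * 16                # constant-envelope reference
multi_carrier = ofdm_symbol([qpsk] * 16)    # one OFDM symbol

print(f"single carrier PAPR: {abs(papr_db(single_carrier)):.2f} dB")
print(f"OFDM worst-case PAPR: {papr_db(multi_carrier):.2f} dB")
```

In this worst case the ratio equals the number of subcarriers, which is the kind of peak a power amplifier has to be backed off to accommodate.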

The other important advantage of the above mentioned access techniques is that they require less complexity for equalization at the receiver. This is an added advantage especially in the MIMO environments since the spatial multiplexing transmission of MIMO systems inherently requires high complexity equalization at the receiver.

In addition to improvements in these multiplexing systems, improved modulation techniques are being used. Whereas earlier standards largely used Phase-shift keying, more efficient systems such as 64QAM are being proposed for use with the 3GPP Long Term Evolution standards.
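The gain from a denser constellation is easy to quantify: an M-point constellation carries log2(M) bits per symbol. The sketch below is illustrative only (the function name is made up for this example) and ignores the higher signal-to-noise ratio that denser constellations need.

```python
def bits_per_symbol(constellation_points: int) -> int:
    """Bits carried by one symbol of an M-point constellation (M a power of two)."""
    m = constellation_points
    if m < 2 or m & (m - 1):
        raise ValueError("constellation size must be a power of two >= 2")
    return m.bit_length() - 1


for name, points in [("BPSK", 2), ("QPSK", 4), ("16QAM", 16), ("64QAM", 64)]:
    print(f"{name}: {bits_per_symbol(points)} bit(s) per symbol")
```

At the same symbol rate, 64QAM therefore carries three times as many bits as QPSK.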

IPv6

Unlike 3G, which is based on two parallel infrastructures consisting of circuit switched and packet switched network nodes respectively, 4G will be based on packet switching only. This will require low-latency data transmission.

It is generally believed that 4th generation wireless networks will support a greater number of wireless devices that are directly addressable and routable. Therefore, in the context of 4G, IPv6 is an important network layer technology and standard that can support a large number of wireless-enabled devices. By increasing the number of IP addresses, IPv6 removes the need for Network Address Translation (NAT), a method of sharing a limited number of addresses among a larger group of devices.

In the context of 4G, IPv6 also enables a number of applications with better multicast, security, and route optimization capabilities. With the available address space and number of addressing bits in IPv6, many innovative coding schemes can be developed for 4G devices and applications that could aid deployment of 4G networks and services.
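To give a sense of the address-space difference, the following sketch uses Python's standard `ipaddress` module to count the addresses available in IPv4 and IPv6. The `2001:db8::/64` subnet is taken from the prefix reserved for documentation and stands in for a single, hypothetical subscriber network.

```python
import ipaddress

# Whole address spaces: 32-bit for IPv4, 128-bit for IPv6.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses
ipv6_total = ipaddress.ip_network("::/0").num_addresses

# One illustrative /64 subnet (documentation prefix, not a real deployment).
subnet = ipaddress.ip_network("2001:db8::/64")

print(f"IPv4 addresses: {ipv4_total}")
print(f"IPv6 addresses: {ipv6_total}")
print(f"one /64 holds more than all of IPv4: {subnet.num_addresses > ipv4_total}")
```

Because even a single subnet exceeds the entire IPv4 space, every device can be given its own routable address without address sharing.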

Advanced Antenna Systems

The performance of radio communications depends on advances in the antenna system, referred to as smart or intelligent antennas. Multiple-antenna technologies have recently emerged to achieve 4G goals such as high data rates, high reliability, and long-range communication. In the early 1990s, many transmission schemes were proposed to cater to the growing data rate needs of data communication. One technology, spatial multiplexing, gained importance for its bandwidth conservation and power efficiency. Spatial multiplexing involves deploying multiple antennas at the transmitter and at the receiver. Independent streams can then be transmitted simultaneously from all the antennas. This multiplies the data rate by a factor equal to the minimum of the number of transmit and receive antennas. This is called MIMO (a branch of intelligent antenna technology). Apart from this, the reliability of transmitting high-speed data over a fading channel can be improved by using more antennas at the transmitter or at the receiver. This is called transmit or receive diversity. Both transmit/receive diversity and transmit spatial multiplexing are categorized as space-time coding techniques, which do not necessarily require channel knowledge at the transmitter. The other category is closed-loop multiple-antenna technologies, which use channel knowledge at the transmitter.
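The multiplexing gain described above can be sketched in a few lines. This is an idealised upper bound: it assumes the channel supports as many independent streams as the smaller antenna count allows, and the 25 Mbit/s single-stream rate is a hypothetical figure chosen so the numbers are easy to follow.

```python
def spatial_streams(tx_antennas: int, rx_antennas: int) -> int:
    """Maximum number of independent streams: min(transmit, receive)."""
    if tx_antennas < 1 or rx_antennas < 1:
        raise ValueError("antenna counts must be at least 1")
    return min(tx_antennas, rx_antennas)


def ideal_peak_rate(single_stream_bps: float, tx_antennas: int, rx_antennas: int) -> float:
    """Idealised peak rate when every stream runs at the single-stream rate."""
    return single_stream_bps * spatial_streams(tx_antennas, rx_antennas)


# Hypothetical 25 Mbit/s per stream.
for tx, rx in [(1, 1), (4, 2), (4, 4)]:
    rate = ideal_peak_rate(25e6, tx, rx)
    print(f"{tx}x{rx}: {spatial_streams(tx, rx)} stream(s), {rate / 1e6:.0f} Mbit/s")
```

Note that a 4x2 configuration gains no more streams than 2x2; the extra transmit antennas can instead be used for diversity.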

Software-Defined Radio (SDR)

SDR is one form of open wireless architecture (OWA). Since 4G is a collection of wireless standards, the final form of a 4G device will incorporate various standards. This can be efficiently realized using SDR technology, which belongs to the area of radio convergence.

Developments

--------------------------------------

The Japanese company NTT DoCoMo has been testing a 4G communication system prototype with 4x4 MIMO called VSF-OFCDM at 100 Mbit/s while moving, and 1 Gbit/s while stationary. NTT DoCoMo recently reached 5 Gbit/s with 12x12 MIMO while moving at 10 km/h, and is planning on releasing the first commercial network in 2010.

Digiweb, an Irish fixed and wireless broadband company, has announced that they have received a mobile communications license from the Irish Telecoms regulator, ComReg. This service will be issued the mobile code 088 in Ireland and will be used for the provision of 4G Mobile communications.

Pervasive networks are an amorphous and presently entirely hypothetical concept where the user can be simultaneously connected to several wireless access technologies and can seamlessly move between them (See handover, IEEE 802.21). These access technologies can be Wi-Fi, UMTS, EDGE, or any other future access technology. Included in this concept is also smart-radio (also known as cognitive radio technology) to efficiently manage spectrum use and transmission power as well as the use of mesh routing protocols to create a pervasive network.

Sprint plans to launch 4G services in trial markets by the end of 2007, with plans to deploy a network that reaches as many as 100 million people in 2008, and has announced a WiMax service called Xohm. In tests in Chicago, the speed was clocked at 100 Mbit/s.

Verizon Wireless announced on September 20, 2007 that it plans a joint effort with the Vodafone Group to transition its networks to the 4G standard LTE. The time of this transition has yet to be announced.

The German WiMAX operator Deutsche Breitband Dienste (DBD) has launched WiMAX services (DSLonair) in Magdeburg and Dessau. The subscribers are offered a tariff plan costing 9.95 euros per month offering 2 Mbit/s download / 300 kbit/s upload connection speeds and 1.5 GB monthly traffic. The subscribers are also charged a 16.99 euro one-time fee and 69.90 euro for the equipment and installation. DBD received additional national licenses for WiMAX in December 2006 and has already launched the services in Berlin, Leipzig and Dresden.

American WiMAX services provider Clearwire made its debut on Nasdaq in New York on March 8, 2007. The IPO was underwritten by Merrill Lynch, Morgan Stanley and JP Morgan. Clearwire sold 24 million shares at a price of $25 per share. This adds $600 million in cash to Clearwire, and gives the company a market valuation of just over $3.9 billion.

Applications

--------------------------------------

The killer application of 4G is not clear, though the improved bandwidths and data throughput offered by 4G networks should provide opportunities for previously impossible products and services to be released. Perhaps the "killer application" is simply always-on mobile Internet with no walled garden and a reasonable flat monthly charge. Existing 2.5G/3G/3.5G phone operator based services are often expensive, and limited in application.

Already at rates of 15-30 Mbit/s, 4G should be able to provide users with streaming high-definition television. At rates of 100 Mbit/s, the content of a DVD, for example a movie, can be downloaded within about 5 minutes for offline access.
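The download estimate is simple arithmetic: transfer time is size in bits divided by the link rate. The sketch below assumes a full single-layer DVD of 4.7 GB (decimal gigabytes) and a sustained 100 Mbit/s with no protocol overhead; both are simplifying assumptions. Under them a full disc takes a little over six minutes, the same order as the figure quoted above, and a smaller movie file correspondingly less.

```python
def download_seconds(size_bytes: float, rate_bps: float) -> float:
    """Ideal transfer time in seconds, ignoring protocol overhead."""
    if rate_bps <= 0:
        raise ValueError("rate must be positive")
    return size_bytes * 8 / rate_bps


DVD_BYTES = 4.7e9     # assumed single-layer DVD capacity
LINK_BPS = 100e6      # 100 Mbit/s, as in the text

seconds = download_seconds(DVD_BYTES, LINK_BPS)
print(f"{seconds:.0f} s, about {seconds / 60:.1f} minutes")
```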

Pre-4G Wireless Standards

--------------------------------------

According to a Visant Strategies study there will be multiple competitors in this space:

-WiMAX - 7.2 million units by 2010 (May include fixed and mobile)

-Flash-OFDM - 13 million subscribers in 2010 (only Mobile)

-3GPP Long Term Evolution of UMTS in 3GPP - valued at US$2 billion in 2010 (~30% of the world population)

-UMB in 3GPP2

-IEEE 802.20

Fixed WiMax and Mobile WiMax are different systems. As of July 2007, all the deployed WiMax is "Fixed Wireless" and is thus not yet 4G (IMT-Advanced), although it can be seen as one of the 4G standards being considered.

Wednesday, October 31, 2007

4G

Posted by pkm at 7:12 PM


Labels: technology

Friday, October 19, 2007

CentOS

CentOS is a freely-available Linux distribution that is based on Red Hat's commercial product: Red Hat Enterprise Linux (RHEL). This rebuild project strives to be 100% binary compatible with the upstream product and, within its mainline and updates, not to vary from that goal. Additional software archives hold later versions of such packages, along with other Free and Open Source Software RPM-based packages. CentOS stands for Community ENTerprise Operating System.

RHEL is largely composed of free and open source software, but is made available in a usable, binary form (such as on CD-ROM or DVD-ROM) only to paying subscribers. As required, Red Hat releases all source code for the product publicly under the terms of the GNU General Public License and other licenses. CentOS developers use that source code to create a final product that is very similar to RHEL and freely available for download and use by the public, but not maintained or supported by Red Hat. There are other distributions derived from RHEL's source as well, but they have not attained the surrounding community that CentOS has built; CentOS is generally the one most current with Red Hat's changes.

CentOS' preferred software updating tool is based on yum, although support for use of an up2date variant exists. Each may be used to download and install both additional packages and their dependencies, and also to obtain and apply periodic and special (security) updates from repositories on the CentOS Mirror Network.

CentOS can be used as an X Window System-based desktop but, like RHEL, is targeted primarily at the server market. Some hosting companies rely on CentOS working together with the cPanel Control Panel.

Versioning scheme

-----------------------------------------

-CentOS version numbers have two parts, a major version and a minor version. The major version corresponds to the version of Red Hat Enterprise Linux from which the source packages used to build CentOS are taken. The minor version corresponds to the update set of that Red Hat Enterprise Linux version from which the source packages used to build CentOS are taken. For example, CentOS 4.4 is built from the source packages from Red Hat Enterprise Linux 4 update 4. CentOS refers to the source as "PNAELV" (Prominent North American Enterprise Linux Vendor), which is an acronym referring to Red Hat, coined in response to questions raised by Red Hat's legal counsel in a letter to project members regarding possible trademark issues.

-Since mid-2006, starting with RHEL 4.4 (formerly known as RHEL 4.0 update 4), Red Hat have adopted a versioning convention identical to that of CentOS, e.g., RHEL 4.5 or RHEL 3.9. See this Red Hat Knowledge Base article for more information.
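The versioning scheme above maps mechanically onto the upstream release. As a small sketch (the class and function names are hypothetical and not part of any CentOS tool), a version string can be split into its two parts and turned back into the corresponding upstream description:

```python
from typing import NamedTuple


class CentOSVersion(NamedTuple):
    major: int   # upstream Red Hat Enterprise Linux version
    minor: int   # upstream update set

    def upstream(self) -> str:
        """Describe the upstream release the packages were built from."""
        return f"Red Hat Enterprise Linux {self.major} update {self.minor}"


def parse_version(text: str) -> CentOSVersion:
    """Parse a 'major.minor' string such as '4.4'."""
    major, minor = text.strip().split(".")
    return CentOSVersion(int(major), int(minor))


print(parse_version("4.4").upstream())
```

For the example in the text, "4.4" yields "Red Hat Enterprise Linux 4 update 4".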

Posted by pkm at 2:03 PM


Labels: technology

Monday, October 1, 2007

NTT DoCoMo

NTT DoCoMo, Inc. (株式会社エヌ・ティ・ティ・ドコモ, Kabushiki-gaisha Enutiti Dokomo, TYO: 9437, NYSE: DCM, LSE: NDCM) is the predominant mobile phone operator in Japan. The name is officially an abbreviation of the phrase Do Communications Over the Mobile Network, and is also a play on dokomo, meaning “everywhere” in Japanese.

DoCoMo was spun off from Nippon Telegraph and Telephone (NTT) in August 1991 to take over the mobile cellular operations. DoCoMo provides 2G (MOVA) PDC cellular services in 800 MHz and 1.5 GHz bands (total 34 MHz bandwidth), and 3G (FOMA) W-CDMA services in the 2 GHz (1945-1960 MHz) band. Its businesses also include PHS (Paldio), paging, and satellite. DoCoMo has announced that its PHS services will be phased out over the next few years.

DoCoMo provides phone, video phone (FOMA and some PHS), i-mode (internet), and mail (i-mode mail, Short Mail, and SMS) services.

Customers

-----------------------------

NTT DoCoMo is a subsidiary of Japan's incumbent telephone operator NTT. The majority of NTT DoCoMo's shares are owned by NTT (which is 31% to 55% government-owned). While some NTT shares are publicly traded, control of the company by Japanese interests (government and civilian) is guaranteed by the number of shares available to buyers. It provides wireless voice and data communications to many subscribers in Japan. NTT DoCoMo is the creator of W-CDMA technology as well as the mobile i-mode service.

NTT DoCoMo has more than 50 million customers, which means more than half of Japan’s cellular market. The company provides a wide variety of mobile multimedia services. These include i-mode which provides e-mail and internet access to over 50 million subscribers, and FOMA, launched in 2001 as the world's first 3G mobile service based on W-CDMA.

In addition to wholly owned subsidiaries in Europe and North America, the company is expanding its global reach through strategic alliances with mobile and multimedia service providers in Asia-Pacific and Europe. NTT DoCoMo is listed on the Tokyo (9437), London (NDCM), and New York (DCM) stock exchanges.

Services

-----------------------------------------------------

i-mode

i-mode is NTT DoCoMo’s proprietary mobile internet platform and as of October 2006 boasts 47 million customers in Japan. This excludes overseas users over networks in 15 other countries, as of November 2005, through i-mode licensing agreements with cellular phone operators.

With i-mode, mobile phone users get benefits such as mobile reservations, secure transactions, and up-to-date information. They get easy access to thousands of Internet sites, as well as specialized services such as e-mail, online shopping, mobile banking, ticket reservations, and restaurant reviews. Mobile users can access sites from anywhere in Japan, and at unusually low rates, because their charges are based on the volume of data transmitted, not the airtime. NTT DoCoMo's i-mode network structure not only provides access to i-mode and i-mode-compatible content through the Internet, but also provides access through a dedicated leased-line circuit for added security. DoJa, the Java environment specification for i-mode, adds further functionality.

Takeshi Natsuno won the Wharton Infosys Business Transformation Award in 2002 for his innovative strategic work on i-mode.

FOMA

DoCoMo was the first mobile operator in the world to commercially roll out 3G mobile communications. DoCoMo's 3G services are marketed under the brand FOMA. At present (2005) FOMA uses W-CDMA technology with a data rate of 384 kbit/s. Since DoCoMo was the first carrier to roll out 3G network technology, it used technologies different from the European UMTS standards, which were not ready early enough for DoCoMo's roll-out. DoCoMo is now working to modify FOMA to conform fully with UMTS standards over time.

HSDPA

DoCoMo is working to upgrade the data rates towards 14.4 Mbit/s using HSDPA. A downlink service of up to 3.6 Mbit/s per cell was launched in August 2005.

Ownership

-----------------------------------------------------

NTT DoCoMo's shares are publicly traded on several stock exchanges, with the major shareholder (over 55%) being Japan's incumbent operator NTT. NTT is also a publicly traded corporation, and its majority shareholder is the Government of Japan.

R&D

-----------------------------------------------------

While most mobile operators globally do not perform any significant R&D and rely on equipment suppliers for the development and supply of new communication equipment, NTT DoCoMo continues the NTT tradition of very extensive R&D efforts. It was mainly DoCoMo's strong R&D investments which allowed DoCoMo to introduce 3G communications and i-mode data services long before such services were introduced anywhere else in the world.

DoCoMo's investments outside Japan

-----------------------------------------------------

NTT DoCoMo has a wide range of foreign investments. However, NTT DoCoMo was not successful in investing in foreign carriers. DoCoMo invested very large multi-billion dollar amounts in KPN, Hutchison Telecom (including 3, Hutch, etc.), KTF, and AT&T Wireless, and had to write off or sell all these investments in foreign carriers. As a result, DoCoMo booked a total of about US$ 10 billion in losses, while during the same time DoCoMo's Japan operations were profitable.

Access DoCoMo outside Japan

-----------------------------------------------------

DoCoMo/Vodafone PDC phones

PDC phones do not work in foreign countries. PDC is deployed only in Japan.

Most DoCoMo "FOMA" phones (except roaming-ready models) and Vodafone Japan 802N, 703N and 905SH

These are W-CDMA-only phones, so they cannot be used with GSM networks. Also note that they cannot be used with a foreign operator's subscription because they do not accept other operators' SIM cards.

Vodafone 3G phones (except 802N, 703N and 905SH) and DoCoMo "FOMA" roaming-ready phones

These phones will work with foreign GSM networks (with the exception of the 903i and 904i series, which only have W-CDMA roaming capability). However, they cannot be used with a foreign operator's subscription until they are unlocked. This can be done for the N900iG, the M1000, and most of the Vodafone-branded 3G phones (except the 904SH and the 705SH).

KDDI phones cannot be used outside Japan, with the exception of the Global Passport models. Those cannot be used locally on non-Japanese networks, however, because of the use of a non-standard ESN (5 letters followed by 6 numbers).

Much like U.S. CDMA phones, Japanese phones are designed for their respective operators (except recent Vodafone 3G phones) and will not work with a foreign operator's subscription. In other words, the fact that a Japanese phone is technically capable of working in a foreign country does not mean it can be used with a local operator's service out of the box.

Posted by pkm at 1:45 AM


Labels: tech design, wireless

Sunday, September 16, 2007

High-Definition Multimedia Interface (HDMI)

The High-Definition Multimedia Interface (HDMI) is a licensable audio/video connector interface for transmitting uncompressed, encrypted digital streams. HDMI connects DRM-enforcing digital audio/video sources, such as a set-top box, a Blu-ray Disc player, a PC running Windows Vista, a video game console, or an AV receiver, to a compatible digital audio device and/or video monitor, such as a digital television (DTV). HDMI began to appear in 2006 on prosumer HDTV camcorders and high-end digital still cameras.

It represents the DRM alternative to consumer analog standards such as RF (coaxial cable), composite video, S-Video, SCART, component video and VGA, and digital standards such as DVI (DVI-D and DVI-I).

General notes

--------------------------------------------

HDMI supports any TV or PC video format, including standard, enhanced, or high-definition video, plus multi-channel digital audio on a single cable. It is independent of the various DTV standards such as ATSC, and DVB (-T,-S,-C), as these are encapsulations of the MPEG movie data streams, which are passed off to a decoder, and output as uncompressed video data on HDMI. HDMI encodes the video data into TMDS for transmission digitally over HDMI.

Devices are manufactured to adhere to various versions of the specification, where each version is given a number, such as 1.0 or 1.3. Each subsequent version of the specification uses the same cables, but increases the throughput and/or capabilities of what can be transmitted over the cable. For example, previously, the maximum pixel clock rate of the interface was 165 MHz, sufficient for supporting 1080p at 60 Hz or WUXGA (1920x1200), but HDMI 1.3 increased that to 340 MHz, providing support for WQXGA (2560x1600) and beyond across a single digital link. See also: HDMI Versions.

HDMI also includes support for 8-channel uncompressed digital audio at 192 kHz sample rate with 24 bits/sample as well as any compressed stream such as Dolby Digital, or DTS. HDMI supports up to 8 channels of one-bit audio, such as that used on Super Audio CDs at rates up to 4x that used by Super Audio CD. With version 1.3, HDMI now also supports lossless compressed streams such as Dolby TrueHD and DTS-HD Master Audio.

HDMI is backward-compatible with the single-link Digital Visual Interface carrying digital video (DVI-D or DVI-I, but not DVI-A) used on modern computer monitors and graphics cards. This means that a DVI-D source can drive an HDMI monitor, or vice versa, by means of a suitable adapter or cable, but the audio and remote control features of HDMI will not be available. Additionally, without support for High-bandwidth Digital Content Protection (HDCP) on the display, the signal source may prevent the end user from viewing or recording certain restricted content.

In the U.S., HDCP support is a standard feature on digital TVs with built-in digital (ATSC) tuners (it is absent from the cheapest digital TVs, as they lack HDMI altogether). In the PC-display industry, where computer displays rarely contain built-in tuners, HDCP support is absent from many models. For example, the first LCD monitors with HDMI connectors did not support HDCP, and few compact LCD monitors (17" or smaller) support HDCP.

The HDMI Founders include consumer electronics manufacturers Hitachi, Matsushita Electric Industrial (Panasonic/National/Quasar), Philips, Sony, Thomson (RCA), Toshiba, and Silicon Image. Digital Content Protection, LLC (a subsidiary of Intel) is providing HDCP for HDMI. In addition, HDMI has the support of major motion picture producers Fox, Universal, Warner Bros., and Disney, and system operators DirecTV and EchoStar (Dish Network) as well as CableLabs and Samsung.

Specifications

--------------------------------------------

HDMI defines the protocol and electrical specifications for the signaling, as well as the pin-out, electrical and mechanical requirements of the cable and connectors.

Connectors

The HDMI Specification has expanded to include three connectors, each intended for different markets.

The standard Type A HDMI connector has 19 pins, with bandwidth to support all SDTV, EDTV and HDTV modes and more. The plug outside dimensions are 13.9 mm wide by 4.45 mm high. Type A is electrically compatible with single-link DVI-D.

A higher resolution version called Type B is defined in HDMI 1.0. Type B has 29 pins (21.2 mm wide), allowing it to carry an expanded video channel for use with very high-resolution future displays, such as WQSXGA (3200x2048). Type B is electrically compatible with dual-link DVI-D, but is not in general use.

The Type C mini-connector is intended for portable devices. It is smaller than Type A (10.42 mm by 2.42 mm) but has the same 19-pin configuration.

Cable

The HDMI cable can be used to carry video, audio, and/or device-controlling signals (CEC). Adaptor cables, from Type A to Type C, are available.

TMDS channel

The Transition Minimized Differential Signaling (TMDS) channel:

-Carries video, audio, and auxiliary data via one of three modes called the Video Data Period, the Data Island Period, and the Control Period. During the Video Data Period, the pixels of an active video line are transmitted. During the Data Island period (which occurs during the horizontal and vertical blanking intervals), audio and auxiliary data are transmitted within a series of packets. The Control Period occurs between Video and Data Island periods.

-Signaling method: Formerly according to DVI 1.0 spec. Single-link (Type A HDMI) or dual-link (Type B HDMI).

-Video pixel rate: 25 MHz to 340 MHz (Type A, as of 1.3) or to 680 MHz (Type B). Video formats with rates below 25 MHz (e.g. 13.5 MHz for 480i/NTSC) are transmitted using a pixel-repetition scheme. From 24 to 48 bits per pixel can be transferred, regardless of rate. Supports 1080p at rates up to 120 Hz and WQSXGA.

-Pixel encodings: RGB 4:4:4, YCbCr 4:4:4 (8–16 bits per component); YCbCr 4:2:2 (12 bits per component)

-Audio sample rates: 32 kHz, 44.1 kHz, 48 kHz, 88.2 kHz, 96 kHz, 176.4 kHz, 192 kHz.

-Audio channels: up to 8.

-Audio streams: any IEC61937-compliant stream, including high bitrate (lossless) streams (Dolby TrueHD, DTS-HD Master Audio).
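The figures in this list can be tied together with a short calculation. A single TMDS link has three data channels, and each 8-bit value is sent as a 10-bit symbol, so the raw link rate is the clock rate times 30. The sketch below applies that to the 165 MHz and 340 MHz limits and shows why a 13.5 MHz format needs pixel repetition. It assumes the TMDS clock equals the pixel rate (24 bits per pixel), and picking the smallest integer repetition factor is a simplification for illustration rather than the selection rule in the specification.

```python
TMDS_DATA_CHANNELS = 3       # three data channels on a single link
BITS_PER_TMDS_SYMBOL = 10    # each 8-bit value is encoded as a 10-bit symbol
MIN_PIXEL_RATE_MHZ = 25.0    # lower bound quoted in the list above


def link_bitrate_gbps(clock_mhz: float) -> float:
    """Raw single-link TMDS bit rate in Gbit/s for a given clock in MHz."""
    return clock_mhz * 1e6 * TMDS_DATA_CHANNELS * BITS_PER_TMDS_SYMBOL / 1e9


def repetition_factor(native_rate_mhz: float) -> int:
    """Smallest integer repetition that lifts the rate to at least 25 MHz."""
    if native_rate_mhz <= 0:
        raise ValueError("pixel rate must be positive")
    factor = 1
    while native_rate_mhz * factor < MIN_PIXEL_RATE_MHZ:
        factor += 1
    return factor


print(f"165 MHz link: {link_bitrate_gbps(165):.2f} Gbit/s")
print(f"340 MHz link: {link_bitrate_gbps(340):.2f} Gbit/s")
print(f"13.5 MHz format repeated x{repetition_factor(13.5)}")
```

These results line up with the 4.9 Gbit/s and 10.2 Gbit/s maximum bit rates quoted for HDMI 1.0 and HDMI 1.3.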

Consumer Electronics Control channel

The Consumer Electronics Control (CEC) channel is optional to implement, but wiring is mandatory. The channel:

-Uses the industry standard AV Link protocol.

-Used for remote control functions.

-One-wire bidirectional serial bus.

-Defined in HDMI Specification 1.0, updated in HDMI 1.2a, and again in 1.3a (added timer and audio commands).

This feature is used in two ways:

-To allow the user to command and control multiple CEC-enabled boxes with one remote control, and

-To allow individual CEC-enabled boxes to command and control each other, without user intervention.

An example of the latter is to allow the DVD player, when the drawer closes with a disk, to command the TV and the intervening A/V Receiver (all with CEC) to power-up, select the appropriate HDMI ports, and auto-negotiate the proper video mode and audio mode. No remote control command is needed. Similarly, this type of equipment can be programmed to return to sleep mode when the movie ends, perhaps by checking the real-time clock. For example, if it is later than 11:00 p.m., and the user does not specifically command the systems with the remote control, then the systems all turn off at the command from the DVD player.

Alternative names for CEC are Anynet (Samsung), BRAVIA Theatre Sync (Sony), Regza Link (Toshiba), RIHD (Onkyo) and Viera Link/EZ-Sync (Panasonic/JVC).

Content protection

-According to High-bandwidth Digital Content Protection (HDCP) Specification 1.2.

-Beginning with HDMI CTS 1.3a, any system which implements HDCP must do so in a fully-compliant manner. HDCP compliance is itself part of the requirements for HDMI compliance.

-The HDMI repeater bit, technically the HDCP repeater bit, controls the authentication and switching/distribution of an HDMI signal.

Versions

--------------------------------------------

Devices are manufactured to adhere to various versions of the specification, each identified by a revision number. Each subsequent version of the specification uses the same cables, but increases the throughput and capabilities of what can be transmitted over them. Whether an existing HDMI cable needs replacing depends on the cable itself, which also carries an HDMI rating. The main consideration is whether the cable can handle the increased bandwidth, for example the 10.2 Gbit/s that comes with version 1.3. Cable compliance testing is included in the HDMI Compliance Test Specification (see TESTID 5-3), with "Category 1" and "Category 2" defined in the HDMI Specification 1.3a (Section 4.2.6).

A product listed as supporting a given HDMI version does not necessarily have all of the features listed under that version, since some features are optional. For example, support for the xvYCC wide color standard is optional in HDMI v1.3. This means that if you have bought a camcorder that supports the wide color space (branded by Sony, for example, as "x.v.Color"), you have to specifically check that the display supports both HDMI v1.3 and the xvYCC wide color standard.

HDMI 1.0

Released December 2002.

-Single-cable digital audio/video connection with a maximum bitrate of 4.9 Gbit/s. Supports up to 165 Mpixel/s video (1080p60 Hz or UXGA) and 8-channel/192 kHz/24-bit audio.

HDMI 1.1

Released May 2004.

-Added support for DVD Audio.

HDMI 1.2

Released August 2005.

-Added support for One Bit Audio, used on Super Audio CDs, up to 8 channels.

-Availability of HDMI Type A connector for PC sources.

-Ability for PC sources to use native RGB color-space while retaining the option to support the YCbCr CE color space.

-Requirement for HDMI 1.2 and later displays to support low-voltage sources.

HDMI 1.2a

Released December 2005.

-Fully specifies Consumer Electronic Control (CEC) features, command sets, and CEC compliance tests.

HDMI 1.3

Released 22 June 2006.

-Increases single-link bandwidth to 340 MHz (10.2 Gbit/s)

-Optionally supports 30-bit, 36-bit, and 48-bit xvYCC with Deep Color or over one billion colors, up from 24-bit sRGB or YCbCr in previous versions.

-Incorporates automatic audio syncing (Audio video sync) capability.

-Optionally supports output of Dolby TrueHD and DTS-HD Master Audio streams for external decoding by AV receivers. TrueHD and DTS-HD are lossless audio codec formats used on HD DVDs and Blu-ray Discs. If the disc player can decode these streams into uncompressed audio, then HDMI 1.3 is not necessary, as all versions of HDMI can transport uncompressed audio.

-Availability of a new mini connector for devices such as camcorders.
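The relationship between the 340 MHz clock and the 10.2 Gbit/s figure quoted for HDMI 1.3 can be checked with a short calculation. The sketch below assumes a single link with three TMDS data channels, each sending a 10-bit symbol per clock; the function and constant names are made up for illustration.

```python
TMDS_DATA_CHANNELS = 3     # assumed: three data channels on a single link
TMDS_BITS_PER_SYMBOL = 10  # assumed: each 8-bit value travels as a 10-bit symbol


def tmds_bitrate_gbps(clock_mhz):
    """Raw single-link bit rate in Gbit/s for a given TMDS clock in MHz."""
    bits_per_second = clock_mhz * 1e6 * TMDS_DATA_CHANNELS * TMDS_BITS_PER_SYMBOL
    return bits_per_second / 1e9


for version, clock_mhz in (("HDMI 1.0", 165), ("HDMI 1.3", 340)):
    print(f"{version}: {clock_mhz} MHz -> {tmds_bitrate_gbps(clock_mhz):.2f} Gbit/s")
```

Under these assumptions the 165 MHz clock of HDMI 1.0 works out to 4.95 Gbit/s, in line with the roughly 4.9 Gbit/s quoted for that version, and 340 MHz gives 10.2 Gbit/s.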

HDMI 1.3a

Released 10 November 2006.

-Cable and Sink modifications for Type C

-Source termination recommendation

-Removed undershoot and maximum rise/fall time limits.

-CEC capacitance limits changed

-RGB video quantization range clarification

-CEC commands for timer control brought back in an altered form, audio control commands added.

-Concurrently released compliance test specification included.

HDMI 1.3b

Testing specification released 26 March 2007.

Cable length

--------------------------------------------

The HDMI specification does not define a maximum cable length. Instead, it specifies a minimum performance standard, and any cable meeting that standard is compliant. As with all cables, signal attenuation becomes too high beyond a certain length, and different construction quality and materials will enable cables of different lengths. In addition, higher performance requirements must be met to support video formats with higher resolutions and/or frame rates than the standard HDTV formats.

The signal attenuation and intersymbol interference caused by the cables can be compensated by using Adaptive Equalization.

HDMI 1.3 defined two categories of cables: Category 1 (standard or HDTV) and Category 2 (high-speed or greater than HDTV) to reduce the confusion about which cables support which video formats. Using 28 AWG, a cable of about 5 metres (~16 ft) can be manufactured easily and inexpensively to Category 1 specifications. Higher-quality construction (24 AWG, tighter construction tolerances, etc.) can reach lengths of 12 to 15 metres (~39 to 49 ft). In addition, active cables (fiber optic or dual Cat-5 cables instead of standard copper) can be used to extend HDMI to 100 metres or more. Some companies also offer amplifiers, equalizers and repeaters that can string several standard (non-active) HDMI cables together.

HDMI and high-definition optical media players

--------------------------------------------

Both introduced in 2006, Blu-ray Disc and HD DVD offer new high-fidelity audio features that require HDMI for best results. Dolby Digital Plus (DD+), Dolby TrueHD and DTS-HD Master Audio use bitrates exceeding TOSLINK's capacity. HDMI 1.3 can transport DD+, TrueHD, and DTS-HD bitstreams in compressed form. This capability would allow a preprocessor or audio/video receiver with the necessary decoder to decode the data itself, but has limited usefulness for HD DVD and Blu-ray.

HD DVD and Blu-ray permit "interactive audio", where the disc-content tells the player to mix multiple audio sources together, before final output. Consequently, most players will handle audio-decoding internally, and simply output LPCM audio all the time. Multichannel LPCM can be transported over an HDMI 1.1 (or higher) connection. As long as the audio/video receiver (or preprocessor) supports multi-channel LPCM audio over HDMI, and supports HDCP, the audio reproduction is equal in resolution to HDMI 1.3. However, many of the cheapest AV receivers do not support audio over HDMI and are often labeled as "HDMI passthrough" devices.

Note that all of the features of an HDMI version may not be implemented in products adhering to that version since certain features of HDMI, such as Deep Color and xvYCC support, are optional.

Criticism

--------------------------------------------

Among manufacturers, the HDMI specification has been criticized as lacking in functional usefulness. The public specification devotes many pages to the lower-level protocol layers (physical, electrical, logical), but there is inadequate documentation for the system framework. HDMI peripherals include audio/video sources, audio-only receivers, audio-video receivers, video-only receivers, repeaters (which have more downstream ports than upstream ports), and switchers (which have more upstream ports than downstream ports). The specification stops short of offering examples of system behavior involving multiple HDMI devices, leaving implementation to the product engineer's interpretation. Even between devices which use chips from Silicon Image (a promoter and supplier of HDMI IP and silicon), interoperability is not assured. The industry is working to improve interoperability through plugfest events (i.e. manufacturer conferences) and more comprehensive design-validation services.

Another criticism of HDMI is that the connectors are not as robust as previous display connectors. Currently most devices with HDMI capability are utilizing surface-mount connectors rather than through-hole or reinforced connectors, making them more susceptible to damage from exterior forces. Tripping over a cable plugged into an HDMI port can easily cause damage to that port.

Closed captioning problems

According to the HDMI Specification, all video timings carried across the link for standard video modes (such as 720p, 1080i, etc.) must have horizontal and vertical timings matching those defined in the CEA-861D Specification. Since those definitions allow only for the visual portion of the frame (or field, for interlaced video modes), there is no line transmitted for closed captions. Line 21 is not part of the transmitted data as it is in analog modes. For HDMI it is but one of the non-data lines in the vertical blanking interval.

Although an HDMI display is allowed to define a 'native mode' for video, which could expand the active line count to encompass Line 21, most MPEG decoders cannot format a digital video stream to include extra lines—they send only vertical blanking. Even if it were possible, the closed captioning character codes would have to be encoded in some way into the pixel values in Line 21. This would then require the receiver logic in the display to decode those codes and construct the captions.

It is possible, although not standardized, that some measure of content in text form can be transmitted from Source to Sink using CEC commands, or using InfoFrame packets. Again, as there is no standardized format for such data it would likely work only between a source and sink system from the same manufacturer. Such uniqueness goes against the standardization mission of HDMI, which is focused in part on interoperability.

Of course, it is possible that a future enhancement of the HDMI Specification may encompass closed caption transport.

From Wikipedia, the free encyclopedia

Posted by pkm at 4:57 PM, 0 comments

Labels: tech design, technology

Thursday, September 13, 2007

Multiprotocol Label Switching (MPLS)

In computer networking and telecommunications, Multi Protocol Label Switching (MPLS) is a data-carrying mechanism that belongs to the family of packet-switched networks. MPLS operates at an OSI Model layer that is generally considered to lie between traditional definitions of Layer 2 (data link layer) and Layer 3 (network layer), and thus is often referred to as a "Layer 2.5" protocol. It was designed to provide a unified data-carrying service for both circuit-based clients and packet-switching clients which provide a datagram service model. It can be used to carry many different kinds of traffic, including IP packets, as well as native ATM, SONET, and Ethernet frames.

Background

---------------------------------------

A number of different technologies were previously deployed with essentially identical goals, such as frame relay and ATM. MPLS is now replacing these technologies in the marketplace, mostly because it is better aligned with current and future technology needs.

In particular, MPLS dispenses with the cell-switching and signaling-protocol baggage of ATM. MPLS recognizes that small ATM cells are not needed in the core of modern networks, since modern optical networks (as of 2001) are so fast (at 10 Gbit/s and well beyond) that even full-length 1500 byte packets do not incur significant real-time queuing delays (the need to reduce such delays, to support voice traffic, having been the motivation for the cell nature of ATM).
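The claim about delays can be made concrete with a serialization-time calculation: a packet queued behind one other packet waits at least as long as that packet takes to be clocked onto the link. The sketch below compares a full-length 1500-byte packet on a 10 Gbit/s link with the same packet and a 53-byte ATM cell on a slower link. The 155.52 Mbit/s rate is an assumption chosen for illustration and does not appear in the text above.

```python
def serialization_delay_us(size_bytes, link_bps):
    """Time in microseconds to clock `size_bytes` onto a link of `link_bps`."""
    return size_bytes * 8 / link_bps * 1e6


FAST_LINK_BPS = 10e9      # 10 Gbit/s, as cited in the text
SLOW_LINK_BPS = 155.52e6  # assumed slower link, for comparison only

print(f"1500 B at 10 Gbit/s:     {serialization_delay_us(1500, FAST_LINK_BPS):.2f} us")
print(f"1500 B at 155.52 Mbit/s: {serialization_delay_us(1500, SLOW_LINK_BPS):.2f} us")
print(f"  53 B at 155.52 Mbit/s: {serialization_delay_us(53, SLOW_LINK_BPS):.2f} us")
```

On the fast link a full-length packet occupies the wire for about 1.2 microseconds, less than a single cell does on the assumed slower link, which is why small cells bring little benefit in a fast core.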

At the same time, it attempts to preserve the traffic engineering and out-of-band control that made frame relay and ATM attractive for deploying large-scale networks.

MPLS was originally proposed by a group of engineers from Ipsilon Networks, but their "IP Switching" technology, which was defined only to work over ATM, did not achieve market dominance. Cisco Systems, Inc. introduced a related proposal, not restricted to ATM transmission, which was called "Tag Switching" while it was a Cisco proprietary proposal and was renamed "Label Switching" when it was handed over to the IETF for open standardization. The IETF work involved proposals from other vendors, and development of a consensus protocol that combined features from several vendors' work.

One original motivation was to allow the creation of simple high-speed switches, since for a significant length of time it was impossible to forward IP packets entirely in hardware. However, advances in VLSI have made such devices possible. Therefore the advantages of MPLS primarily revolve around the ability to support multiple service models and perform traffic management. MPLS also offers a robust recovery framework that goes beyond the simple protection rings of synchronous optical networking (SONET/SDH).

While the traffic management benefits of migrating to MPLS are quite valuable (better reliability, increased performance), there is a significant loss of visibility and access into the MPLS cloud for IT departments.

How MPLS works

---------------------------------------

MPLS works by prefixing packets with an MPLS header containing one or more 'labels'. This is called a label stack.

Each label stack entry contains four fields:

-a 20-bit label value.

-a 3-bit field for QoS priority (experimental).

-a 1-bit bottom of stack flag. If this is set, it signifies the current label is the last in the stack.

-an 8-bit TTL (time to live) field.
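The four fields listed above add up to 32 bits, so one label stack entry fits in a single 4-byte word. The following is a minimal sketch of packing and unpacking such an entry, assuming the fields appear in the order listed (label, QoS/experimental bits, bottom-of-stack flag, TTL) and are sent in network byte order. The function names are illustrative and not taken from any particular library.

```python
import struct


def pack_entry(label, exp, bottom, ttl):
    """Pack one label stack entry into 4 bytes (network byte order)."""
    if not (0 <= label < 2**20 and 0 <= exp < 2**3 and 0 <= ttl < 2**8):
        raise ValueError("field out of range")
    word = (label << 12) | (exp << 9) | (int(bool(bottom)) << 8) | ttl
    return struct.pack("!I", word)


def unpack_entry(data):
    """Split 4 bytes back into the four fields of a label stack entry."""
    (word,) = struct.unpack("!I", data)
    return {
        "label": word >> 12,            # top 20 bits
        "exp": (word >> 9) & 0x7,       # next 3 bits
        "bottom": bool((word >> 8) & 1),  # 1-bit bottom-of-stack flag
        "ttl": word & 0xFF,             # low 8 bits
    }


entry = pack_entry(label=16, exp=0, bottom=True, ttl=64)
print(entry.hex())          # 00010140
print(unpack_entry(entry))
```

A label stack is then just a sequence of these 4-byte entries, with the bottom-of-stack flag set only on the last one.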

These MPLS-labeled packets are switched after a label lookup/switch instead of a lookup into the IP table. As mentioned above, when MPLS was conceived, label lookup and label switching were faster than a RIB lookup because they could take place directly within the switching fabric and not the CPU.

The entry and exit points of an MPLS network are called Label Edge Routers (LER), which, respectively, push an MPLS label onto the incoming packet and pop it off the outgoing packet. Routers that perform routing based only on the label are called Label Switch Routers (LSR). In some applications, the packet presented to the LER may already have a label, in which case the LER pushes a second label onto the packet. For more information see Penultimate Hop Popping.

In the specific context of an MPLS-based Virtual Private Network (VPN), LSRs that function as ingress and/or egress routers to the VPN are often called PE (Provider Edge) routers. Devices that function only as transit routers are similarly called P (Provider) routers. See RFC 2547. The job of a P router is significantly easier than that of a PE router, so P routers can be less complex and may be more dependable because of this.

When an unlabeled packet enters the ingress router and needs to be passed on to an MPLS tunnel, the router first determines the forwarding equivalence class the packet should be in, and then inserts one or more labels in the packet's newly created MPLS header. The packet is then passed on to the next hop router for this tunnel.

When a labeled packet is received by an MPLS router, the topmost label is examined. Based on the contents of the label a swap, push (impose) or pop (dispose) operation can be performed on the packet's label stack. Routers can have prebuilt lookup tables that tell them which kind of operation to do based on the topmost label of the incoming packet so they can process the packet very quickly. In a swap operation the label is swapped with a new label, and the packet is forwarded along the path associated with the new label.

In a push operation a new label is pushed on top of the existing label, effectively "encapsulating" the packet in another layer of MPLS. This allows the hierarchical routing of MPLS packets. Notably, this is used by MPLS VPNs.

In a pop operation the label is removed from the packet, which may reveal an inner label below. This process is called "decapsulation". If the popped label was the last on the label stack, the packet "leaves" the MPLS tunnel. This is usually done by the egress router, but see PHP below.
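The swap, push and pop operations described above can be sketched with a toy forwarding table keyed on the topmost label. Everything in the table (label values, router names) is hypothetical and only serves to show how the stack changes from hop to hop.

```python
def apply_operation(stack, op, new_label=None):
    """Return a new label stack; the top of the stack is the last element."""
    stack = list(stack)
    if op == "swap":
        stack[-1] = new_label    # replace the topmost label
    elif op == "push":
        stack.append(new_label)  # encapsulate in another MPLS layer
    elif op == "pop":
        stack.pop()              # decapsulate; may reveal an inner label
    else:
        raise ValueError(f"unknown operation: {op}")
    return stack


# Hypothetical prebuilt table: incoming top label -> (operation, new label, next hop)
TABLE = {
    100: ("swap", 200, "lsr-b"),
    200: ("push", 300, "lsr-c"),
    300: ("pop", None, "lsr-d"),
}


def forward(stack):
    """Look up only the topmost label and apply the configured operation."""
    op, new_label, next_hop = TABLE[stack[-1]]
    return apply_operation(stack, op, new_label), next_hop


print(forward([100]))       # ([200], 'lsr-b')
print(forward([200]))       # ([200, 300], 'lsr-c')
print(forward([200, 300]))  # ([200], 'lsr-d')
```

An empty stack after a pop corresponds to the packet leaving the MPLS tunnel, at which point it has to be forwarded by other means.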

During these operations, the contents of the packet below the MPLS Label stack are not examined. Indeed transit routers typically need only to examine the topmost label on the stack. The forwarding of the packet is done based on the contents of the labels, which allows "protocol independent packet forwarding" that does not need to look at a protocol-dependent routing table and avoids the expensive IP longest prefix match at each hop.

At the egress router, when the last label has been popped, only the payload remains. This can be an IP packet, or any of a number of other kinds of payload packet. The egress router must therefore have routing information for the packet's payload, since it must forward it without the help of label lookup tables. An MPLS transit router has no such requirement.

In some special cases, the last label can also be popped off at the penultimate hop (the hop before the egress router). This is called Penultimate Hop Popping (PHP). This may be interesting in cases where the egress router has lots of packets leaving MPLS tunnels, and thus spends inordinate amounts of CPU time on this. By using PHP, transit routers connected directly to this egress router effectively offload it, by popping the last label themselves.

MPLS can make use of existing ATM network infrastructure, as its labeled flows can be mapped to ATM virtual circuit identifiers, and vice-versa.

Installing and removing MPLS paths

---------------------------------------

There are two standardized protocols for managing MPLS paths: CR-LDP (Constraint-based Routing Label Distribution Protocol) and RSVP-TE, an extension of the RSVP protocol for traffic engineering. An extension of the BGP protocol can also be used to manage MPLS paths.

An MPLS header does not identify the type of data carried inside the MPLS path. If one wants to carry two different types of traffic between the same two routers, with different treatment from the core routers for each type, one has to establish a separate MPLS path for each type of traffic.

Comparison of MPLS versus IP

---------------------------------------

MPLS cannot be compared to IP as a separate entity because it works in conjunction with IP and IP's IGP routing protocols. MPLS gives IP networks simple traffic engineering, the ability to transport Layer 3 (IP) VPNs with overlapping address spaces, and support for Layer 2 pseudowires (with Any Transport over MPLS, or AToM; see the Martini draft). Routers with programmable CPUs and without TCAM/CAM or another method for fast lookups may also see a limited increase in performance.

MPLS relies on IGP routing protocols to construct its label forwarding table, and the scope of any IGP is usually restricted to a single carrier for stability and policy reasons. As there is still no standard for carrier-carrier MPLS it is not possible to have the same MPLS service (Layer2 or Layer3 VPN) covering more than one operator.

MPLS local protection

When a network element fails and recovery mechanisms are employed at the IP layer, restoration may take several seconds, which is unacceptable for real-time applications (such as VoIP). In contrast, MPLS local protection meets the requirements of real-time applications with recovery times comparable to those of SONET rings (up to 50 ms).

Comparison of MPLS versus ATM

---------------------------------------

While the underlying protocols and technologies are different, both MPLS and ATM provide a connection-oriented service for transporting data across computer networks. In both technologies connections are signaled between endpoints, connection state is maintained at each node in the path and encapsulation techniques are used to carry data across the connection. Excluding differences in the signaling protocols (RSVP/LDP for MPLS and PNNI for ATM) there still remain significant differences in the behavior of the technologies.

The most significant difference is in the transport and encapsulation methods. MPLS is able to work with variable length packets while ATM transports fixed-length (53 byte) cells. Packets must be segmented, transported and re-assembled over an ATM network using an adaption layer, which adds significant complexity and overhead to the data stream. MPLS, on the other hand, simply adds a label to the head of each packet and transmits it on the network.
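The overhead difference can be estimated with a short calculation. The sketch below assumes an AAL5-style adaptation layer (an 8-byte trailer, with the result padded to a whole number of 48-byte cell payloads) and a 4-byte MPLS label stack entry. The choice of AAL5 is an assumption, since the text does not name the adaptation layer, and layer-2 framing under MPLS is ignored.

```python
import math

ATM_CELL_BYTES = 53      # fixed cell size from the text
ATM_PAYLOAD_BYTES = 48   # cell size minus the 5-byte header
AAL5_TRAILER_BYTES = 8   # assumed AAL5-style trailer
MPLS_ENTRY_BYTES = 4     # one 32-bit label stack entry


def atm_bytes_on_wire(packet_len):
    """Bytes sent when a packet is segmented into cells (AAL5 assumed)."""
    cells = math.ceil((packet_len + AAL5_TRAILER_BYTES) / ATM_PAYLOAD_BYTES)
    return cells * ATM_CELL_BYTES


def mpls_bytes_on_wire(packet_len, labels=1):
    """Bytes sent when `labels` label stack entries are placed before the packet."""
    return packet_len + labels * MPLS_ENTRY_BYTES


for size in (40, 576, 1500):
    print(size, atm_bytes_on_wire(size), mpls_bytes_on_wire(size))
```

For a 1500-byte packet this gives 32 cells, or 1696 bytes, over ATM against 1504 bytes with a single MPLS label.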

Differences exist, as well, in the nature of the connections. An MPLS connection (LSP) is uni-directional, allowing data to flow in only one direction between two endpoints. Establishing two-way communications between endpoints requires a pair of LSPs to be established. Because two LSPs are required for connectivity, data flowing in the forward direction may use a different path from data flowing in the reverse direction. ATM point-to-point connections (Virtual Circuits), on the other hand, are bi-directional, allowing data to flow in both directions over the same path (this applies only to SVC ATM connections; PVC ATM connections are uni-directional).

Both ATM and MPLS support tunnelling of connections inside connections. MPLS uses label stacking to accomplish this while ATM uses Virtual Paths. MPLS can stack multiple labels to form tunnels within tunnels. The ATM Virtual Path Identifier (VPI) and Virtual Channel Identifier (VCI) are both carried together in the cell header, limiting ATM to a single level of tunnelling.

The biggest single advantage that MPLS has over ATM is that it was designed from the start to be complementary to IP. Modern routers are able to support both MPLS and IP natively across a common interface allowing network operators great flexibility in network design and operation. ATM's incompatibilities with IP require complex adaptation making it largely unsuitable in today's predominantly IP networks.

MPLS deployment

---------------------------------------

MPLS is currently in use in large "IP Only" networks, and is standardized by IETF in RFC 3031.

In practice, MPLS is mainly used to forward IP datagrams and Ethernet traffic. Major applications of MPLS are Telecommunications traffic engineering and MPLS VPN.

Competitors to MPLS

---------------------------------------

MPLS can exist in both IPv4 environment (IPv4 routing protocols) and IPv6 environment (IPv6 routing protocols). The major goal of MPLS development - the increase of routing speed - is no longer relevant because of the usage of ASIC, TCAM and CAM based switching. Therefore the major usage of MPLS is to implement limited traffic engineering and Layer 3/Layer 2 “service provider type” VPNs over existing IPv4 networks. The only competitors to MPLS are technologies like L2TPv3 that also provide services such as service provider Layer 2 and Layer 3 VPNs.

IEEE 1355 is a completely unrelated technology that does something similar in hardware.

IPv6 references: Grosetete, Patrick, IPv6 over MPLS, Cisco Systems 2001; Juniper Networks IPv6 and Infranets White Paper; Juniper Networks DoD's Research and Engineering Community White Paper.

From Wikipedia, the free encyclopedia

Posted by pkm at 3:36 PM, 2 comments

Labels: tech design, technology

Thaicom 4 (IPSTAR)

Thaicom 4, also known as IPSTAR, is a broadband satellite built by Space Systems/Loral (SS/L) for Shin Satellite and was the heaviest commercial satellite launched as of August 2005. It was launched on August 11, 2005 from the European Space Agency's spaceport in French Guiana onboard the Ariane rocket. The satellite had a launch mass of 6486 kilograms. Thaicom 4 is from SS/L’s LS-1300 line of spacecraft.

The IPSTAR broadband satellite was designed for high-speed, 2-way broadband communication over an IP platform and is to play an important role in the broadband Internet/multimedia revolution and the convergence of information and communication technologies.

The satellite's 45 Gbit/s bandwidth capacity, in combination with its platform’s ability to provide an immediately available, high-capacity ground network with affordable bandwidth, allows for rapid deployment and flexible service locations within its footprint.

The IPSTAR system comprises a gateway earth station communicating over the IPSTAR satellite to provide broadband packet-switched communications to a large number of small terminals in a star network configuration.

A wide-band data link from the gateway to the user terminal employs Orthogonal Frequency Division Multiplexing (OFDM) with a Time Division Multiplex (TDM) overlay. These forward channels employ highly efficient transmission methods, including Turbo Product Code (TPC) and higher order modulation (L-codes) for increased system performance.

In the terminal-to-gateway direction (or return link), the narrow-band channels employ the same efficient transmission methods. These narrow-band channels operate in different multiple-access modes based on bandwidth-usage behavior, including Slotted-ALOHA, ALOHA, and TDMA for STAR return link waveform.

Spot Beam Coverage

Traditional satellite technology utilizes a broad single beam to cover entire continents and regions. With the introduction of multiple narrowly focused spot beams and frequency reuse, IPSTAR is capable of maximizing the available frequency for transmissions. Increasing bandwidth by a factor of twenty compared to traditional Ku-band satellites translates into better efficiencies. Despite the higher costs associated with spot beam technology, the overall cost per circuit is considerably lower as compared to shaped beam technology.

Dynamic Power Allocation

IPSTAR's Dynamic Power Allocation optimizes the use of power among beams and keeps a power reserve of 20 percent that can be allocated to beams affected by rain fade, thus maintaining the link.

From Wikipedia, the free encyclopedia

Posted by pkm at 3:30 PM, 0 comments

Labels: hardware, technology

Sunday, September 9, 2007

Storage area network (SAN)

In computing, a storage area network (SAN) is an architecture to attach remote computer storage devices (such as disk arrays, tape libraries and optical jukeboxes) to servers in such a way that, to the operating system, the devices appear as locally attached. Although cost and complexity are dropping, as of 2007, SANs are still uncommon outside larger enterprises.

By contrast to a SAN, network-attached storage (NAS) uses file-based protocols such as NFS or SMB/CIFS where it is clear that the storage is remote, and computers request a portion of an abstract file rather than a disk block.

Network types

----------------------------------------------

Most storage networks use the SCSI protocol for communication between servers and disk drive devices. However, they do not use the SCSI low-level physical interface (e.g. cables), as its bus topology is unsuitable for networking. To form a network, SCSI is mapped onto other low-level protocols through a mapping layer:

-Fibre Channel Protocol (FCP), mapping SCSI over Fibre Channel. Currently the most common. Comes in 1 Gbit/s, 2 Gbit/s, 4 Gbit/s, 8 Gbit/s, 10 Gbit/s variants.

-iSCSI, mapping SCSI over TCP/IP.

-HyperSCSI, mapping SCSI over Ethernet.

-FICON mapping over Fibre Channel (used by mainframe computers).

-ATA over Ethernet, mapping ATA over Ethernet.

-SCSI and/or TCP/IP mapping over InfiniBand (IB).

Storage sharing

----------------------------------------------

The driving force for the SAN market is rapid growth of highly transactional data that require high speed, block-level access to the hard drives (such as data from email servers, databases, and high usage file servers). Historically, enterprises were first creating "islands" of high performance SCSI disk arrays. Each island was dedicated to a different application and visible as a number of "virtual hard drives" (or LUNs).

SAN essentially enables connecting those storage islands using a high-speed network.

However, an operating system still sees SAN as a collection of LUNs and is supposed to maintain its own file systems on them. Still, the most reliable and most widely used are the local file systems, which cannot be shared among multiple hosts. If two independent local file systems resided on a shared LUN, they would be unaware of the fact, would have no means of cache synchronization and eventually would corrupt each other. Thus, sharing data between computers through a SAN requires advanced solutions, such as SAN file systems or clustered computing.

Despite such issues, SANs help to increase storage capacity utilization, since multiple servers share the same growth reserve on disk arrays.

In contrast, NAS allows many computers to access the same file system over the network and synchronizes their accesses. Lately, the introduction of NAS heads allowed easy conversion of SAN storage to NAS.

Benefits

----------------------------------------------

Sharing storage usually simplifies storage administration and adds flexibility since cables and storage devices do not have to be physically moved to move storage from one server to another.

Other benefits include the ability to allow servers to boot from the SAN itself. This allows for a quick and easy replacement of faulty servers since the SAN can be reconfigured so that a replacement server can use the LUN of the faulty server. This process can take as little as half an hour and is a relatively new idea being pioneered in newer data centers. There are a number of emerging products designed to facilitate and speed up this process still further. For example, Brocade offers an Application Resource Manager product which automatically provisions servers to boot off a SAN, with typical-case load times measured in minutes. While this area of technology is still new, many view it as being the future of the enterprise datacenter.

SANs also tend to enable more effective disaster recovery processes. A SAN could span a distant location containing a secondary storage array. This enables storage replication implemented by disk array controllers, by server software, or by specialized SAN devices. Since IP WANs are often the least costly method of long-distance transport, the Fibre Channel over IP (FCIP) and iSCSI protocols have been developed to allow SAN extension over IP networks. The traditional physical SCSI layer could only support a few meters of distance, not nearly enough to ensure business continuance in a disaster. Demand for this SAN application increased dramatically after the September 11th attacks in the United States, and with increased regulatory requirements associated with Sarbanes-Oxley and similar legislation.

Consolidation of disk arrays economically accelerated the advancement of some of their advanced features. Those include I/O caching, snapshotting, and volume cloning (Business Continuance Volumes or BCVs).

SAN infrastructure

----------------------------------------------

SANs often utilize a Fibre Channel fabric topology - an infrastructure specially designed to handle storage communications. It provides faster and more reliable access than higher-level protocols used in NAS. A fabric is similar in concept to a network segment in a local area network. A typical Fibre Channel SAN fabric is made up of a number of Fibre Channel switches.

Today, all major SAN equipment vendors also offer some form of Fibre Channel routing solution, and these bring substantial scalability benefits to the SAN architecture by allowing data to cross between different fabrics without merging them. These offerings use proprietary protocol elements, and the top-level architectures being promoted are radically different. They often enable mapping Fibre Channel traffic over IP or over SONET/SDH.

Compatibility

----------------------------------------------

One of the early problems with Fibre Channel SANs was that the switches and other hardware from different manufacturers were not entirely compatible. Although the basic storage protocol, FCP, was always quite standard, some of the higher-level functions did not interoperate well. Similarly, many host operating systems would react badly to other operating systems sharing the same fabric. Many solutions were pushed to the market before standards were finalized, and vendors innovated around the standards.

The combined efforts of the members of the Storage Networking Industry Association (SNIA) improved the situation during 2002 and 2003. Today most vendor devices, from HBAs to switches and arrays, interoperate nicely, though there are still many high-level functions that do not work between different manufacturers’ hardware.

SANs at home

----------------------------------------------

SANs are primarily used in large-scale, high-performance enterprise storage operations. It would be unusual to find a single disk drive connected directly to a SAN. Instead, SANs are normally networks of large disk arrays. SAN equipment is relatively expensive, so Fibre Channel host bus adapters are rare in desktop computers. The iSCSI SAN technology is expected to eventually produce cheap SANs, but it is unlikely that this technology will be used outside the enterprise data center environment. Desktop clients are expected to continue using NAS protocols such as CIFS and NFS. The exception to this may be remote storage replication.

SANs in the Media and Entertainment

----------------------------------------------

Video editing workgroups require very high data rates. Outside of the enterprise market, this is one area that greatly benefits from SANs.

Per-node bandwidth usage control, sometimes referred to as quality of service (QoS), is especially important in video workgroups because it ensures fair, prioritized bandwidth usage across the network. Avid Unity and Tiger Technology MetaSAN are specifically designed for video networks and offer this functionality.
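As a rough illustration of what per-node bandwidth control involves, the sketch below splits a link's capacity among nodes in proportion to priority weights, caps each node at what it asked for, and hands any leftover to the nodes that still want more (a weighted max-min fair share). The function name, node names, weights, and figures are hypothetical; this is not how Avid Unity or MetaSAN are known to work.

```python
def allocate_bandwidth(capacity, demands, weights):
    """Weighted max-min fair share (illustrative only).

    capacity: total bandwidth available, in any unit (e.g. MB/s)
    demands:  {node: requested bandwidth}
    weights:  {node: priority weight, higher means a larger share}
    """
    allocation = {node: 0.0 for node in demands}
    active = {node for node, demand in demands.items() if demand > 0}
    remaining = float(capacity)

    while active and remaining > 1e-9:
        total_weight = sum(weights[n] for n in active)
        # Nodes whose weighted share covers everything they still need.
        satisfied = {
            n for n in active
            if demands[n] - allocation[n] <= remaining * weights[n] / total_weight
        }
        if not satisfied:
            # Nobody can be fully served: split what is left by weight.
            for n in active:
                allocation[n] += remaining * weights[n] / total_weight
            break
        # Serve the satisfied nodes in full, then re-share the rest.
        for n in satisfied:
            remaining -= demands[n] - allocation[n]
            allocation[n] = float(demands[n])
        active -= satisfied

    return allocation
```

With a capacity of 400, demands of 200, 200, and 50 for three hypothetical nodes `edit1`, `edit2`, and `ingest`, and weights of 2, 1, and 1, the higher-priority `edit1` and the small `ingest` request are served in full, and `edit2` receives the remaining 150.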

Storage virtualization and SANs

----------------------------------------------

Storage virtualization refers to the process of completely abstracting logical storage from physical storage. The physical storage resources are aggregated into storage pools, from which the logical storage is created. It presents to the user a logical space for data storage and transparently handles the process of mapping it to the actual physical location. This is naturally implemented inside each modern disk array, using the vendor's proprietary solution. However, the goal is to virtualize multiple disk arrays, made by different vendors and scattered over the network, into a single monolithic storage device that can be managed uniformly.
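The mapping from logical to physical storage can be pictured with a minimal sketch. It assumes a pool that hands out blocks from several arrays in order and records where each logical block landed; the class names, array names, and block counts are invented for illustration, and real products use far more elaborate allocation and metadata schemes.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalArray:
    name: str
    total_blocks: int
    next_free: int = 0  # simple bump allocator, blocks are never reclaimed


@dataclass
class StoragePool:
    arrays: list
    # volume name -> list of (array name, physical block), indexed by logical block
    volumes: dict = field(default_factory=dict)

    def create_volume(self, name, size_blocks):
        """Carve a logical volume out of whichever arrays have free blocks."""
        free = sum(a.total_blocks - a.next_free for a in self.arrays)
        if size_blocks > free:
            raise ValueError("pool has too few free blocks")
        mapping = []
        for array in self.arrays:
            while len(mapping) < size_blocks and array.next_free < array.total_blocks:
                mapping.append((array.name, array.next_free))
                array.next_free += 1
        self.volumes[name] = mapping

    def resolve(self, volume, logical_block):
        """Translate a logical block address to its physical location."""
        return self.volumes[volume][logical_block]


pool = StoragePool([PhysicalArray("vendor_a", 4), PhysicalArray("vendor_b", 8)])
pool.create_volume("vol0", 6)
print(pool.resolve("vol0", 5))  # ('vendor_b', 1)
```

The user of `vol0` sees six contiguous logical blocks and never needs to know that the last two live on a second array.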

Posted by pkm at 7:36 PM


Labels: network, technology

Surface Computing (Microsoft Surface)

Microsoft Surface is a forthcoming Microsoft product that combines software and hardware to let one or more users manipulate digital content using natural motions, hand gestures, or physical objects. It was announced on May 29, 2007 at D5, and is expected to be released by commercial partners in November 2007. Initial customers will be in the hospitality business, such as restaurants, hotels, retail, and public entertainment venues.

Overview

-------------------------------------------------

Surface is essentially a Windows Vista PC tucked inside a black table base, topped with a 30-inch touchscreen in a clear acrylic frame. Five cameras that can sense nearby objects are mounted beneath the screen. Users can interact with the machine by touching or dragging their fingertips and objects such as paintbrushes across the screen, or by setting real-world items tagged with special barcode labels on top of it.

Surface has been optimized to respond to 52 touches at a time. During a demonstration with a reporter, Mark Bolger, the Surface Computing group's marketing director, "dipped" his finger in an on-screen paint palette, then dragged it across the screen to draw a smiley face. Then he used all 10 fingers at once to give the face a full head of hair.

In addition to recognizing finger movements, Microsoft Surface can also identify physical objects. Microsoft says that when a diner sets down a wine glass, for example, the table can automatically offer additional wine choices tailored to the dinner being eaten.

Prices will reportedly be $5,000 to $10,000 per unit. However, Microsoft said it expects prices to drop enough to make consumer versions feasible in 3 to 5 years.

The machines, which Microsoft debuted May 30, 2007 at a technology conference in Carlsbad, California, are set to arrive in November in T-Mobile USA stores and properties owned by Starwood Hotels & Resorts Worldwide Inc. and Harrah's Entertainment Inc.

History

-------------------------------------------------

The technology behind Surface is called Multi-touch. It has at least a 25-year history, beginning in 1982, with pioneering work being done at the University of Toronto (multi-touch tablets) and Bell Labs (multi-touch screens). The product idea for Surface was initially conceptualized in 2001 by Steven Bathiche of Microsoft Hardware and Andy Wilson of Microsoft Research. In October 2001, a virtual team was formed with Bathiche and Wilson as key members, to bring the idea to the next stage of development.

In 2003, the team presented the idea to the Microsoft Chairman Bill Gates, in a group review. Later, the virtual team was expanded and a prototype nicknamed T1 was produced within a month. The prototype was based on an IKEA table with a hole cut in the top and a sheet of architect vellum used as a diffuser. The team also developed some applications, including pinball, a photo browser and a video puzzle. Over the next year, Microsoft built more than 85 early prototypes for Surface. The final hardware design was completed in 2005.

A similar concept was used in the 2005 science fiction movie The Island by Sean Bean's character, "Merrick". In the DVD commentary, director Michael Bay stated that the concept of the device came from consultation with Microsoft during the making of the movie. One of the associates of the film's technology consultant from MIT later joined Microsoft to work on the Surface project.

Surface was unveiled by Microsoft CEO Steve Ballmer on May 29, 2007 at The Wall Street Journal's D: All Things Digital conference in Carlsbad, California. Surface Computing is part of Microsoft's Productivity and Extended Consumer Experiences Group, which is within the Entertainment & Devices division. The first few companies to deploy Surface will include Harrah's Entertainment, Starwood Hotels & Resorts Worldwide, T-Mobile and a distributor, International Game Technology.

Features

-------------------------------------------------

Microsoft identifies four main components of Surface's interface: direct interaction, multi-touch contact, a multi-user experience, and object recognition. The device also enables drag-and-drop transfer of digital media when Wi-Fi enabled devices, such as a Microsoft Zune, cellular phones, or digital cameras, are placed on its surface.

Surface features multi-touch technology that allows a user to interact with the device at more than one point of contact, for example by using all of their fingers to make a drawing instead of just one. As an extension of this, multiple users can interact with the device at once.

The technology allows non-digital objects to be used as input devices. In one example, a normal paint brush was used to create a digital painting in the software. This is made possible by the fact that, in using cameras for input, the system does not rely on restrictive properties required of conventional touchscreen or touchpad devices such as the capacitance, electrical resistance, or temperature of the tool used (see Touchscreen).

The computer's "vision" is created by a near-infrared, 850-nanometer-wavelength LED light source aimed at the surface. When an object touches the tabletop, the light is reflected to multiple infrared cameras with a net resolution of 1280 x 960, allowing it to sense and react to items touching the tabletop.

Surface will ship with basic applications, including photos, music, virtual concierge, and games, that can be customized for the customers.

Specifications

-------------------------------------------------

Surface is a 30-inch (76 cm) display in a table-like form factor, 22 inches (56 cm) high, 21 inches (53 cm) deep, and 42 inches (107 cm) wide. The Surface tabletop is acrylic, and its interior frame is powder-coated steel. The software platform runs on Windows Vista, and the unit has wired 10/100 Ethernet, wireless 802.11 b/g, and Bluetooth 2.0 connectivity.


Posted by pkm at 2:17 AM


Labels: technology

Electronic Product Code

The Electronic Product Code (EPC) is a family of coding schemes created as an eventual successor to the bar code. The EPC was created as a low-cost method of tracking goods using RFID technology. It is designed to meet the needs of various industries, while guaranteeing uniqueness for all EPC-compliant tags. EPC tags were designed to identify each item manufactured, as opposed to just the manufacturer and class of products, as bar codes do today. The EPC accommodates existing coding schemes and defines new schemes where necessary.

The EPC was the creation of the MIT Auto-ID Center, a consortium of over 120 global corporations and university labs. The EPC system is currently managed by EPCglobal, Inc., a subsidiary of GS1, creators of the UPC barcode.

The Electronic Product Code promises to become the standard for global RFID usage, and a core element of the proposed EPCglobal Network.

Structure

--------------------------------

All EPC numbers contain a header identifying the encoding scheme that has been used. This in turn dictates the length, type and structure of the EPC. EPC encoding schemes frequently contain a serial number which can be used to uniquely identify one object.

EPC Version 1.3 supports the following coding schemes:

-General Identifier (GID): GID-96

-Serialized version of the GS1 Global Trade Item Number (GTIN): SGTIN-96, SGTIN-198

-GS1 Serial Shipping Container Code (SSCC): SSCC-96

-GS1 Global Location Number (GLN): SGLN-96, SGLN-195

-GS1 Global Returnable Asset Identifier (GRAI): GRAI-96, GRAI-170

-GS1 Global Individual Asset Identifier (GIAI): GIAI-96, GIAI-202

-DoD Construct: DoD-96
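To make the header-plus-fields structure concrete, here is a small sketch that packs and unpacks a GID-96 code. The field widths (8-bit header, 28-bit General Manager Number, 24-bit Object Class, 36-bit Serial Number) and the header value 0x35 are given as best recalled from the EPCglobal Tag Data Standard and should be checked against the specification before use; the function names are made up for this example.

```python
GID96_HEADER = 0x35  # assumed 8-bit header value identifying the GID-96 scheme


def encode_gid96(manager, object_class, serial):
    """Pack the three GID-96 fields into a 24-digit hexadecimal string."""
    if not (0 <= manager < 1 << 28
            and 0 <= object_class < 1 << 24
            and 0 <= serial < 1 << 36):
        raise ValueError("field out of range for GID-96")
    value = (GID96_HEADER << 88) | (manager << 60) | (object_class << 36) | serial
    return f"{value:024X}"


def decode_gid96(hex_epc):
    """Split a 96-bit EPC, given as 24 hex digits, into its GID-96 fields."""
    value = int(hex_epc, 16)
    if len(hex_epc) != 24 or value >> 88 != GID96_HEADER:
        raise ValueError("not a GID-96 code")
    return {
        "manager": (value >> 60) & ((1 << 28) - 1),
        "object_class": (value >> 36) & ((1 << 24) - 1),
        "serial": value & ((1 << 36) - 1),
    }


epc = encode_gid96(manager=1, object_class=2, serial=3)
print(epc)                # 350000001000002000000003
print(decode_gid96(epc))  # {'manager': 1, 'object_class': 2, 'serial': 3}
```

A reader first inspects the header to learn which scheme applies, and only then knows how to slice the remaining bits, which is why the header comes first in every EPC.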

From Wikipedia, the free encyclopedia

Posted by pkm at 2:11 AM


Thursday, September 6, 2007

Near Field Communication

Near Field Communication, or NFC, is a short-range wireless technology that enables communication between devices over a short distance (about a hand's width). The technology is primarily aimed at use in mobile phones.

NFC is compatible with the existing contactless infrastructure already in use for public transportation and payment.

Essential specifications

--------------------------------------------

-Works by magnetic field induction. It operates within the globally available and unlicensed RF band of 13.56 MHz.

-Working distance: 0-20 centimeters

-Speed: 106 kbit/s, 212 kbit/s or 424 kbit/s

-There are two modes:

-Passive Communication Mode: The Initiator device provides a carrier field and the Target device answers by modulating the existing field. In this mode, the Target device may draw its operating power from the Initiator-provided electromagnetic field, thus making the Target device a transponder.

-Active Communication Mode: Both Initiator and Target device communicate by generating their own field. In this mode, both devices typically need to have a power supply.

-NFC can be used to configure and initiate other wireless network connections such as Bluetooth, Wi-Fi or Ultra-wideband.
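The three data rates listed above translate into transfer times with simple arithmetic. The sketch below assumes an ideal link with no protocol overhead and takes 1 kbit as 1,000 bits; the function name and the 53,000-byte payload are chosen purely for illustration.

```python
NFC_RATES_KBITS = (106, 212, 424)  # data rates from the list above, in kbit/s


def transfer_time_seconds(payload_bytes, rate_kbits):
    """Ideal time to move a payload, ignoring all protocol overhead."""
    return payload_bytes * 8 / (rate_kbits * 1000)


for rate in NFC_RATES_KBITS:
    seconds = transfer_time_seconds(53_000, rate)
    print(f"{rate} kbit/s: {seconds:.1f} s")
# 106 kbit/s: 4.0 s
# 212 kbit/s: 2.0 s
# 424 kbit/s: 1.0 s
```

Even at the highest rate, a payload of a few tens of kilobytes takes about a second, which suggests why NFC is useful for setting up a faster connection such as Bluetooth or Wi-Fi rather than carrying bulk data itself.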

A patent licensing program for NFC is currently under development by Via Licensing Corporation, an independent subsidiary of Dolby Laboratories.

Uses and applications

--------------------------------------------

NFC technology is currently aimed mainly at use in mobile phones. There are three main use cases for NFC:

-card emulation: the NFC device behaves like an existing contactless card

-reader mode: the NFC device is active and reads a passive RFID tag, for example for interactive advertising

-P2P mode: two NFC devices communicate with each other and exchange information

Many applications will be possible, such as:

-Mobile ticketing in public transport - an extension of the existing contactless infrastructure.

-Mobile Payment - the mobile phone acts as a debit/ credit payment card.

-Smart poster - the mobile phone is used to read RFID tags on outdoor billboards in order to get info on the move.