High-definition television (HDTV) is a digital television broadcasting system with a significantly higher resolution than traditional formats (NTSC, SECAM, PAL). While some early analog HDTV formats were broadcast in Europe and Japan, HDTV is usually broadcast digitally, because digital television (DTV) broadcasting requires much less bandwidth if it uses enough video compression. HDTV technology was first introduced in the US during the 1990s by a group of electronics companies called the Digital HDTV Grand Alliance.

History

--------------------------------------------

High-definition television was first developed by Nippon Hōsō Kyōkai (NHK) and was unveiled in 1969. However, the system did not become mainstream until the late 1990s.

In the early 2000s, a number of high-definition television standards were competing for the still-developing niche markets.

Three HDTV standards are currently defined by the International Telecommunication Union (ITU-R BT.709). They include 1080i (1,080 actively interlaced lines), 1080p (1,080 progressively scanned lines), and 720p (720 progressively scanned lines). All standards use a 16:9 aspect ratio, leading many consumers to the incorrect conclusion of equating widescreen television with HDTV. All current HDTV broadcasting standards are encompassed within the ATSC and DVB specifications.

HDTV is also capable of "theater-quality" audio because it uses the Dolby Digital (AC-3) format to support "5.1" surround sound. It should be noted that while HDTV is more like a theater in quality than conventional television, 35 mm and 70 mm film projectors used in theaters still have the highest resolution and best viewing quality on very large screens. Many HDTV programs are produced from movies on film as well as content shot in HD video.

The term "high-definition" can refer to the resolution specifications themselves, or more loosely to media capable of similar sharpness, such as photographic film and digital video. As of July 2007, HDTV saturation in the US has reached 30 percent – in other words, three out of every ten American households own at least one HDTV. However, only 44 percent of those that do own an HDTV are actually receiving HDTV programming, as many consumers are not aware that they must obtain special receivers to receive HDTV from cable or satellite, or use ATSC tuners to receive over-the-air broadcasts; others may not even know what HDTV is.

HDTV Sources

--------------------------------------------

The rise in popularity of large screens and projectors has made the limitations of conventional Standard Definition TV (SDTV) increasingly evident. An HDTV compatible television set will not improve the quality of SDTV channels. To get a better picture HDTV televisions require a High Definition (HD) signal. Typical sources of HD signals are as follows:

-Over the air with an antenna. Most cities in the US with major network affiliates broadcast over the air in HD. To receive this signal an HD tuner is required. Most newer HDTV sets have an HD tuner built in. For sets without a built-in HD tuner, a separate set-top HD tuner box can be purchased or rented from a cable or satellite company.

-Cable television companies often offer HDTV broadcasts as part of their digital broadcast service. This is usually done with a set-top box or CableCARD issued by the cable company. Alternatively one can usually get the network HDTV channels for free with basic cable by using a QAM tuner built into their HDTV or set-top box. Some cable carriers also offer HDTV on-demand playback of movies and commonly viewed shows.

-Satellite-based TV companies, such as Optimum, DirecTV, Sky Digital, Virgin Media (in the UK and Ireland) and Dish Network, offer HDTV to customers as an upgrade. New satellite receiver boxes and a new satellite dish are often required to receive HD content.

-Video game systems, such as the Xbox (NTSC only), Xbox 360, and PlayStation 3, can output an HD signal.

-Two optical disc standards, Blu-ray and HD DVD, can provide enough digital storage to store hours of HD video content.

Notation

--------------------------------------------

In the context of HDTV, the formats of the broadcasts are referred to using a notation describing:

-The number of lines in the vertical display resolution.

-Whether progressive scan (p) or interlaced scan (i) is used. Progressive scan redraws all the lines (a frame) of a picture in each refresh. Interlaced scan redraws every second line (a field) in one refresh and the remaining lines in a second refresh. Interlaced scan increases picture resolution while saving bandwidth, but at the expense of some flicker or other artifacts.

-The number of frames or fields per second.

The format 720p60 is 1280 × 720 pixels, progressive encoding with 60 frames per second (60 Hz). The format 1080i50 is 1920 × 1080 pixels, interlaced encoding with 50 fields (25 frames) per second. Often the frame or field rate is left out, indicating only the resolution and type of the frames or fields, and leading to confusion. Sometimes the rate is to be inferred from the context, in which case it can usually be assumed to be either 50 or 60, except for 1080p which is often used to denote either 1080p24, 1080p25 or 1080p30 at present but will also denote 1080p50 and 1080p60 in the future.

A frame or field rate can also be specified without a resolution. For example 24p means 24 progressive scan frames per second and 50i means 25 interlaced frames per second, consisting of 50 interlaced fields per second. Most HDTV systems support some standard resolutions and frame or field rates. The most common are noted below.
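The notation above can be read mechanically. The following is a minimal sketch, not a standard parser: the names `VideoFormat` and `parse_format`, the set of recognised line counts, and the width table are assumptions made for illustration. It also shows why a bare label is ambiguous: "24p" and "720p" have the same shape, so the sketch guesses from the number.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumption for this sketch: these line counts are treated as resolutions,
# any other bare number (24, 25, 50, 60, ...) is treated as a rate.
KNOWN_LINES = {480, 576, 720, 1080}
# Widths of the two HD rasters named in the text.
HD_WIDTHS = {720: 1280, 1080: 1920}


@dataclass
class VideoFormat:
    lines: Optional[int]    # vertical resolution, None if omitted
    scan: str               # "p" (progressive) or "i" (interlaced)
    rate: Optional[float]   # frames/s for "p", fields/s for "i"

    @property
    def frames_per_second(self) -> Optional[float]:
        # Two interlaced fields make up one full frame.
        if self.rate is None:
            return None
        return self.rate / 2 if self.scan == "i" else self.rate

    @property
    def width(self) -> Optional[int]:
        return HD_WIDTHS.get(self.lines)


def parse_format(text: str) -> VideoFormat:
    match = re.fullmatch(r"(\d+(?:\.\d+)?)([pi])(\d+(?:\.\d+)?)?",
                         text.strip().lower())
    if match is None:
        raise ValueError(f"not a recognised format label: {text!r}")
    first, scan, rate = match.groups()
    value = float(first)
    # "24p" or "50i": a rate given without a resolution.
    if rate is None and value not in KNOWN_LINES:
        return VideoFormat(None, scan, value)
    if not value.is_integer():
        raise ValueError(f"line count must be an integer: {text!r}")
    return VideoFormat(int(value), scan,
                       float(rate) if rate is not None else None)


for label in ("720p60", "1080i50", "24p", "50i", "1080p"):
    print(label, parse_format(label))
```

A label such as "1080p" parses with `rate=None`, which is exactly the case where the rate has to be inferred from context.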

Changes in notation

The terminology described above was invented for digital systems in the 1990s. A digital signal encodes the color of each pixel, or dot on the screen as a series of numbers. Before that, analog TV signals encoded values for one monochrome, or three-color signals as they scanned a screen continuously from line to line. By comparison, radio encodes an analog signal of the sound to be sent to an amplified speaker, typically up to 20 kHz, but video signals are in the MHz range, which is why they are much higher in the broadcast spectrum than audio radio. Analog video signals have no true "pixels" to measure horizontal resolution. The vertical scan-line count included off-screen scan lines with no picture information while the CRT beam returned to the top of the screen to begin another field. Thus NTSC was considered to have "525 lines" even though only 486 of them had a picture (625/576 for PAL). Similarly the Japanese MUSE system was called "1125 line", but is only 1035i by today's measuring standards. This change was made because digital systems have no need of blank retrace lines unless the signal was converted to analog to drive a CRT.

Standard resolutions

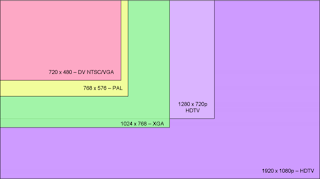

When resolution is considered, both the resolution of the transmitted signal and the (native) displayed resolution of a TV set are taken into account. Digital NTSC- and PAL/SECAM-like signals (480i60 and 576i50 respectively) are transmitted at a horizontal resolution of 720 or 704 "pixels". However, these transmitted DTV "pixels" are not square, and have to be stretched for correct viewing. PAL TV sets with an aspect ratio of 4:3 use a fixed pixel grid of 768 × 576 or 720 × 540; with an aspect ratio of 16:9 they use 1440 × 768, 1024 × 576 or 960 × 540; NTSC ones use 640 × 480 and 852 × 480 or, seldom, 720 × 540. High Definition usually refers to one million pixels or more.

In Australia, the 576p50 format is also considered an HDTV format, as it has doubled temporal resolution through the use of progressive scanning. Thus, a number of Australian networks broadcast a 576p signal as their high-definition DVB-T signal, while others use the more conventional 720p and 1080i formats. Technically, however, the 576p format is defined as enhanced-definition television.

Standard frame or field rates

23.976p (allows easy conversion to NTSC)

24p (cinematic film)

25p (PAL, SECAM DTV progressive material)

30p (NTSC DTV progressive material)

50p (PAL, SECAM DTV progressive material)

60p (NTSC DTV progressive material)

50i (PAL & SECAM)

60i (NTSC, PAL-M)

Comparison with SDTV

HDTV has at least twice the linear resolution of standard-definition television (SDTV), thus allowing much more detail to be shown compared with analog television or regular DVD. In addition, the technical standards for broadcasting HDTV are also able to handle 16:9 aspect ratio pictures without using letterboxing or anamorphic stretching, thus further increasing the effective resolution for such content.

Format considerations

--------------------------------------------

The optimum format for a broadcast depends on the type of media used for the recording and the characteristics of the content. The field and frame rate should match the source, as should the resolution. On the other hand, a very high resolution source may require more bandwidth than is available in order to be transmitted without loss of fidelity. The lossy compression that is used in all digital HDTV storage/transmission systems will then cause the received picture to appear distorted when compared to the uncompressed source.

Photographic film destined for the theater typically has a high resolution and is photographed at 24 frames per second. Depending on the available bandwidth and the amount of detail and movement in the picture, the optimum format for video transfer is thus either 720p24 or 1080p24. When shown on television in countries using PAL, film must be converted to 25 frames per second by speeding it up by 4.1 percent. In countries using the NTSC standard, 30 frames per second, a technique called 3:2 pulldown is used. One film frame is held for three video fields, (1/20 of a second) and then the next is held for two video fields (1/30 of a second) and then the process repeats, thus achieving the correct film rate with two film frames shown in 1/12 of a second.

See also: Telecine
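The 3:2 pulldown cadence described above can be sketched in a few lines. This is an illustration only: the function name `pulldown_3_2` is made up here, and frames are represented by labels rather than image data.

```python
from typing import List, Sequence


def pulldown_3_2(film_frames: Sequence[str]) -> List[str]:
    """Spread film frames over video fields, holding them for 3, 2, 3, 2, ... fields."""
    fields: List[str] = []
    for index, frame in enumerate(film_frames):
        hold = 3 if index % 2 == 0 else 2
        fields.extend([frame] * hold)
    return fields


# Four film frames become ten video fields.
print(pulldown_3_2(["A", "B", "C", "D"]))
# ['A', 'A', 'A', 'B', 'B', 'C', 'C', 'C', 'D', 'D']

# One second of film (24 frames) fills one second of 60-field video.
print(len(pulldown_3_2([str(n) for n in range(24)])))  # 60
```

Each pair of film frames occupies five fields, which at 60 fields per second is the 1/12 of a second mentioned in the text.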

Older (pre-HDTV) recordings on video tape such as Betacam SP are often either in the form 480i60 or 576i50. These may be upconverted to a higher resolution format (720i), but removing the interlace to match the common 720p format may distort the picture or require filtering which actually reduces the resolution of the final output.

See also: Deinterlacing

Non-cinematic HDTV video recordings are recorded in either 720p or 1080i format. The format used depends on the broadcast company (if destined for television broadcast); however, in other scenarios the format choice will vary depending on a variety of factors. In general, 720p is more appropriate for fast action as it uses progressive scan frames, as opposed to 1080i which uses interlaced fields and thus can have a degradation of image quality with fast motion.

In addition, 720p is used more often with Internet distribution of HD video, as all computer monitors are progressive, and most graphics cards do a poor job of de-interlacing video in real time. 720p video also has lower storage and decoding requirements than 1080i or 1080p.

In North America, Fox, My Network TV (also owned by Fox), ABC, and ESPN (ABC and ESPN are both owned by Disney) currently broadcast 720p content. NBC, Universal HD (both owned by General Electric), CBS, PBS, The CW, HBO, Showtime, Starz!, MOJO HD, HDNet, TNT, and Discovery HD Theater currently broadcast 1080i content.

In the United Kingdom, Sky Digital carries BBC HD, Sky One HD, Sky Arts HD, Sky Movies HD1 & 2, Sky Sports HD1, 2 & X, Discovery HD, National Geographic Channel HD, The History Channel HD and Sky Box Office HD1 & 2, with MTV HD, FX HD, Living HD, Rush HD, Ultra HD and Eurosport HD to come in the near future. BBC HD is also available on Virgin Media.

Technical details

--------------------------------------------

MPEG-2 is most commonly used as the compression codec for digital HDTV broadcasts. Although MPEG-2 supports up to 4:2:2 YCbCr chroma subsampling and 10-bit quantization, HD broadcasts use 4:2:0 and 8-bit quantization to save bandwidth. Some broadcasters also plan to use MPEG-4 AVC, such as the BBC which is trialing such a system via satellite broadcast, which will save considerable bandwidth compared to MPEG-2 systems. Some German broadcasters already use MPEG-4 AVC together with DVB-S2 (Pro 7, Sat.1 and Premiere). Although MPEG-2 is more widely used at present, it seems likely that in the future all European HDTV may be MPEG-4 AVC, and Ireland and Norway, which have not yet begun any digital television broadcasts, are considering MPEG-4 AVC for SD Digital as well as HDTV on terrestrial broadcasts.

HDTV is capable of "theater-quality" audio because it uses the Dolby Digital (AC-3) format to support "5.1" surround sound. The pixel aspect ratio of native HD signals is a "square" 1.0, in which each pixel's height equals its width. New HD compression and recording formats such as HDV use rectangular pixels to save bandwidth and to open HDTV acquisition for the consumer market. For more technical details see the articles on HDV, ATSC, DVB, and ISDB.

Television studios, as well as production and distribution facilities, use the HD-SDI (SMPTE 292M) interconnect standard, a nominally 1.485 Gbit/s, 75-ohm serial digital interface, to route uncompressed HDTV signals. The native bitrate of HDTV formats cannot be supported by 6-8 MHz standard-definition television channels for over-the-air broadcast and consumer distribution media, hence the widespread use of compression in consumer applications. SMPTE 292M interconnects are generally unavailable in consumer equipment, partly because of the expense involved in supporting this format, and partly because consumer electronics manufacturers are required (typically by licensing agreements) to provide only encrypted digital outputs on consumer video equipment, for fear that unencrypted outputs would aggravate video piracy.
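The nominal 1.485 Gbit/s figure can be reproduced with simple arithmetic. The numbers below are assumptions not stated in the text: a 1080-line raster of 2200 total samples per line and 1125 total lines at 30 frames per second, carried as 10-bit 4:2:2 (20 bits per sample period), and a payload of roughly 19.39 Mbit/s for a 6 MHz ATSC channel. Treat it as a rough sketch of why compression is unavoidable, not as a statement of the standard.

```python
def raster_bitrate(samples_per_line: int, lines: int,
                   frames_per_second: int, bits_per_sample: int) -> int:
    """Uncompressed bit rate of a raster in bit/s."""
    return samples_per_line * lines * frames_per_second * bits_per_sample


# Full raster including blanking (assumed 2200 x 1125 at 30 frames/s, 20 bits).
link_rate = raster_bitrate(2200, 1125, 30, 20)

# Active picture only (1920 x 1080), same sampling.
active_rate = raster_bitrate(1920, 1080, 30, 20)

# Assumed payload of one 6 MHz ATSC broadcast channel, in bit/s.
ATSC_PAYLOAD = 19.39e6

print(f"HD-SDI link rate : {link_rate / 1e9:.3f} Gbit/s")
print(f"Active picture   : {active_rate / 1e9:.3f} Gbit/s")
print(f"Reduction needed : about {active_rate / ATSC_PAYLOAD:.0f}:1")
```

Under these assumptions the active picture alone is dozens of times larger than what a single broadcast channel can carry.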

Newer dual-link HD-SDI signals are needed for the latest 4:4:4 camera systems (Sony HDC-F950 & Thomson Viper), where one link/coax cable contains the 4:2:2 YCbCr info and the other link/coax cable contains the additional 0:2:2 CbCr information.

Advantages of HDTV expressed in non-engineering terms

--------------------------------------------

High-definition television (HDTV) potentially offers a much better picture quality than standard television. HD's greater clarity means the picture on screen can be less blurred and less fuzzy. HD also brings other benefits such as smoother motion, richer and more natural colors, surround sound, and the ability to allow a variety of input devices to work together. However, there are a variety of reasons why the best HD quality is not usually achieved. The main problem is a lack of HD input. Many cable and satellite channels and even some "high definition" channels are not broadcast in true HD. Also, image quality may be lost if the television is not properly connected to the input device or not properly configured for the input's optimal performance.

Almost all commercially available HD is digital, so the system cannot produce a snowy or washed out image from a weak signal, effects from signal interference, such as herringbone patterns, or vertical rolling. HD digital signals will either deliver an excellent picture, a picture with noticeable pixelation, a series of still pictures, or no picture at all. Any interference will render the signal unwatchable. As opposed to a lower-quality signal one gets from interference in an analogue television broadcast, interference in a digital television broadcast will freeze, skip, or display "garbage" information.

With HDTV the lack of imperfections in the television screen often seen on traditional television is another reason why many prefer high definition to analog. As mentioned, problems such as snow caused from a weak signal, double images from ghosting or multi-path and picture sparkles from electromagnetic interference are a thing of the past. These problems often seen on a conventional television broadcast just do not occur on HDTV.

HD programming and films will be presented in 16:9 widescreen format (although films created in even wider ratios will still display "letterbox" bars on the top and bottom of even 16:9 sets). Older films and programming that retain their 4:3 ratio display will be presented in a version of letterbox commonly called "pillar box," displaying bars on the right and left of 16:9 sets (rendering the term "fullscreen" a misnomer). While this is an advantage when it comes to playing 16:9 movies, it creates the same disadvantage when playing 4:3 television shows that standard televisions have playing 16:9 movies. A way to address this is to zoom the 4:3 image to fill the screen or reframe its material to 14:9 aspect ratio, either during preproduction or manually in the TV set.

The colors will generally look more realistic, due to their greater bandwidth. The visual information is about 2-5 times more detailed overall. The gaps between scanning lines are smaller or invisible. Legacy TV content that was shot and preserved on 35 mm film can now be viewed at nearly the same resolution as that at which it was originally photographed. A good analogy for television quality is looking through a window. HDTV offers a degree of clarity that is much closer to this.

The "i" in format names such as 1080i stands for "interlaced", while the "p" in names such as 1080p stands for "progressive". With interlaced scan, the 1,080 lines are split into two fields: the first 540 lines are "painted" in one field, followed by the remaining 540 lines in the next field. This method reduces the bandwidth and raises the field rate to 50-60 per second. A progressive scan displays all 1,080 lines in every frame at 60 frames per second, using more bandwidth. (See: An explanation of HDTV numbers and layman's glossary)

Dolby Digital 5.1 surround sound is broadcast along with standard HDTV video signals, allowing full surround sound capabilities. (Standard broadcast television signals usually only include monophonic or stereophonic audio. Stereo broadcasts can be encoded with Dolby Surround, an early home video surround format.) Both designs make more efficient use of electricity than SDTV designs of equivalent size, which can mean lower operating costs. LCD is a leader in energy conservation.

Disadvantages of HDTV expressed in non-engineering terms

--------------------------------------------

HDTV is the answer to a question few consumers were asking. Viewers will have to upgrade their TVs in order to see HDTV broadcasts, incurring household expense in the process. Adding a new aspect ratio makes for consumer confusion when their display is capable of one or more ratios but must be switched to the correct one by the user. Traditional standard definition TV shows, when displayed correctly on an HDTV monitor, will have empty display areas to the left and right of the image. Many consumers aren't satisfied with this unused display area and choose instead to distort their standard definition shows by stretching them horizontally to fill the screen, giving everything a too-wide or not-tall-enough appearance. Alternately, they'll choose to zoom the image which removes content that was on the top and bottom of the original TV show.

Early systems

--------------------------------------------

The term "high definition" was used to describe the electronic television systems of the late 1930s and 1940s, beginning with the former British 405-line black-and-white system, introduced in 1936; however, this and the subsequent 525-line U.S. NTSC system, established in 1941, were high definition only in comparison with previous mechanical and electronic television systems, and NTSC, along with the later European 625-line PAL and SECAM systems, is described as standard definition today.

On the other hand, the 819-line French black-and-white television system introduced after World War II arguably was high definition in the modern sense, as it had a line count and theoretical maximum resolution considerably higher than those of the 625-line systems introduced across most of postwar Europe. However, it required far more bandwidth than other systems, and was switched off in 1986, a year after the final British 405-line broadcasts.

Japan was the only country where commercial analog HDTV was launched and had some success. In other places, such as Europe, analog (HD-MAC) HDTV failed. Finally, although the United States experimented with analog HDTV (there were about 10 proposed formats), it soon moved towards a digital approach.

Thursday, August 30, 2007

High-definition television

Posted by pkm at 2:43 PM


Tuesday, August 28, 2007

Ultra-wideband

Ultra-wideband (UWB, ultra-wide band, ultraband, etc.) is a radio technology that can be used for short-range high-bandwidth communications by using a large portion of the radio spectrum in a way that doesn't interfere with other more traditional 'narrow band' uses. It also has applications in radar imaging, precision positioning and tracking technology.

Ultra-Wideband (UWB) may be used to refer to any radio technology having bandwidth exceeding the lesser of 500 MHz or 20% of the arithmetic center frequency, according to the Federal Communications Commission (FCC). This article discusses the meaning of Ultra-wideband in the field of radio communications.
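The bandwidth test in this definition is easy to express in code. The sketch below assumes the caller already knows the lower and upper edges of the emission; how those edges are measured is outside its scope, and the function name `is_uwb` is made up for illustration.

```python
def is_uwb(f_low_hz: float, f_high_hz: float) -> bool:
    """True if the bandwidth exceeds the lesser of 500 MHz or 20% of the center frequency."""
    bandwidth = f_high_hz - f_low_hz
    center = (f_low_hz + f_high_hz) / 2      # arithmetic center frequency
    threshold = min(500e6, 0.20 * center)
    return bandwidth > threshold


print(is_uwb(3.1e9, 10.6e9))    # 7.5 GHz wide: qualifies on the 500 MHz rule
print(is_uwb(2.4e9, 2.4835e9))  # 83.5 MHz wide around 2.44 GHz: does not qualify
print(is_uwb(100e6, 130e6))     # 30 MHz wide around 115 MHz: qualifies on the 20% rule
```

The last case shows why the definition uses the lesser of the two limits: at low center frequencies a signal can count as UWB with far less than 500 MHz of bandwidth.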

Overview

------------------------------------------

Ultra-Wideband (UWB) is a technology for transmitting information spread over a large bandwidth (>500 MHz) that should, in theory and under the right circumstances, be able to share spectrum with other users. A February 14, 2002 Report and Order by the FCC authorizes the unlicensed use of UWB in 3.1–10.6 GHz. This is intended to provide an efficient use of scarce radio bandwidth while enabling both high data rate personal-area network (PAN) wireless connectivity and longer-range, low data rate applications as well as radar and imaging systems. More than four dozen devices have been certified under the FCC UWB rules, the vast majority of which are radar, imaging or positioning systems. Deliberations in the International Telecommunication Union Radiocommunication Sector (ITU-R) have resulted in a Report and Recommendation on UWB in November of 2005. National jurisdictions around the globe are expected to act on national regulations for UWB very soon. The UK regulator Ofcom announced a similar decision on 9 August 2007.

Ultra Wideband was traditionally accepted as pulse radio, but the FCC and ITU-R now define UWB in terms of a transmission from an antenna for which the emitted signal bandwidth exceeds the lesser of 500 MHz or 20% of the center frequency. Thus, pulse-based systems—wherein each transmitted pulse instantaneously occupies the UWB bandwidth, or an aggregation of at least 500 MHz worth of narrow band carriers, for example in orthogonal frequency-division multiplexing (OFDM) fashion—can gain access to the UWB spectrum under the rules. Pulse repetition rates may be either low or very high. Pulse-based radars and imaging systems tend to use low repetition rates, typically in the range of 1 to 100 megapulses per second. On the other hand, communications systems favor high repetition rates, typically in the range of 1 to 2 giga-pulses per second, thus enabling short-range gigabit-per-second communications systems. Each pulse in a pulse-based UWB system occupies the entire UWB bandwidth, thus reaping the benefits of relative immunity to multipath fading (but not to intersymbol interference), unlike carrier-based systems that are subject to both deep fades and intersymbol interference.

The FCC power spectral density emission limit for UWB emitters operating in the UWB band is -41.3 dBm/MHz. This is the same limit that applies to unintentional emitters in the UWB band, the so called Part 15 limit. However, the emission limit for UWB emitters can be significantly lower (as low as -75 dBm/MHz) in other segments of the spectrum.
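To put the -41.3 dBm/MHz limit in perspective, the sketch below integrates it over the whole 3.1–10.6 GHz band. It assumes a perfectly flat spectrum sitting exactly at the limit, which is an upper bound rather than a description of any real emitter; the function names are made up for illustration.

```python
import math


def total_power_dbm(psd_dbm_per_mhz: float, bandwidth_mhz: float) -> float:
    """Total power of a flat spectrum: PSD plus 10*log10 of the bandwidth in MHz."""
    return psd_dbm_per_mhz + 10 * math.log10(bandwidth_mhz)


def dbm_to_milliwatts(power_dbm: float) -> float:
    return 10 ** (power_dbm / 10)


band_mhz = 10_600 - 3_100                      # 7500 MHz
limit_dbm = total_power_dbm(-41.3, band_mhz)
print(f"{limit_dbm:.2f} dBm = {dbm_to_milliwatts(limit_dbm):.3f} mW")
```

Even when the entire band is filled, the total comes to roughly half a milliwatt, which is why UWB links tend to be short-range.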

A significant difference between traditional radio transmissions and UWB radio transmissions is that traditional transmissions convey information by varying the power, frequency, and/or phase of a sinusoidal wave. UWB transmissions can convey information by generating radio energy at specific time instants and occupying a large bandwidth, thus enabling pulse-position or time modulation. Information can also be imparted (modulated) on UWB signals (pulses) by encoding the polarity of the pulse or the amplitude of the pulse, and/or by using orthogonal pulses. UWB pulses can be sent sporadically at relatively low pulse rates to support time/position modulation, but can also be sent at rates up to the inverse of the UWB pulse bandwidth. Pulse-UWB systems have been demonstrated at channel pulse rates in excess of 1.3 giga-pulses per second using a continuous stream of UWB pulses (Continuous Pulse UWB or "C-UWB"), supporting forward error correction encoded data rates in excess of 675 Mbit/s. Such a pulse-based UWB method using bursts of pulses is the basis of the IEEE 802.15.4a draft standard and working group, which has proposed UWB as an alternative PHY layer.

One of the valuable aspects of UWB radio technology is the ability for a UWB radio system to determine "time of flight" of the direct path of the radio transmission between the transmitter and receiver to a high resolution. With a two-way time transfer technique distances can be measured to high resolution as well as to high accuracy by compensating for local clock drifts and inaccuracies.
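A simple form of two-way ranging can be sketched as follows. This is an illustrative simplification, not the specific time-transfer technique the text refers to: it assumes the initiator measures the round-trip time, the responder reports how long it waited before replying, and only the responder's clock is in error. All names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def two_way_range(t_round_s: float, t_reply_s: float) -> float:
    """Distance from the round-trip time minus the responder's reply delay."""
    time_of_flight = (t_round_s - t_reply_s) / 2
    return SPEED_OF_LIGHT * time_of_flight


def drift_range_error(clock_drift: float, t_reply_s: float) -> float:
    """Range error if the reply delay is mis-measured by a fractional clock drift."""
    return SPEED_OF_LIGHT * clock_drift * t_reply_s / 2


# A target 10 m away that replies after 1 microsecond.
tof = 10.0 / SPEED_OF_LIGHT
print(two_way_range(2 * tof + 1e-6, 1e-6))

# A 20 ppm clock error with a 1 ms reply delay already costs about 3 m,
# which is why the drift has to be compensated.
print(drift_range_error(20e-6, 1e-3))
```

The second figure illustrates why high resolution alone is not enough: without drift compensation, accuracy is dominated by the clocks rather than by the pulse width.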

Another valuable aspect of pulse-based UWB is that the pulses are very short in space (less than 60 cm for a 500 MHz wide pulse, less than 23 cm for a 1.3 GHz bandwidth pulse), so most signal reflections do not overlap the original pulse, and thus the traditional multipath fading of narrow band signals does not exist. However, there still is inter-pulse interference for fast pulse systems which can be mitigated by coding techniques.
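The pulse lengths quoted above follow from a rule of thumb: a pulse of bandwidth B lasts roughly 1/B seconds and therefore spans about c/B in space. The sketch below applies that approximation, which is an assumption here since the exact length depends on pulse shape.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def pulse_length_m(bandwidth_hz: float) -> float:
    """Approximate spatial extent of a pulse whose duration is 1/bandwidth."""
    return SPEED_OF_LIGHT / bandwidth_hz


for bandwidth in (500e6, 1.3e9):
    print(f"{bandwidth / 1e6:.0f} MHz -> {pulse_length_m(bandwidth) * 100:.1f} cm")
```

A reflection whose path is longer than the direct path by more than this length arrives after the direct pulse has ended, so the two do not overlap.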

Technical discussion

------------------------------------------

One performance measure of a radio in applications like communication, positioning, location, tracking, radar, is the channel capacity for a given bandwidth and signaling format. Channel capacity is the theoretical maximum possible number of bits per second of information that can be conveyed through one or more links in an area. According to the Shannon-Hartley theorem, channel capacity of a properly encoded signal is proportional to the bandwidth of the channel and to the logarithm of signal to noise ratio (SNR)—assuming the noise is additive white Gaussian noise (AWGN). Thus channel capacity increases linearly by increasing bandwidth of the channel to the maximum value available, or equivalently in a fixed channel bandwidth by increasing the signal power exponentially. By virtue of the huge bandwidths inherent to UWB systems, the huge channel capacities can be achieved without invoking higher order modulations that need very high SNR to operate.
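The trade-off described above can be made concrete with the Shannon-Hartley formula, C = B log2(1 + SNR). The sketch below compares a wide channel at low SNR with a narrow one; the 500 MHz and 20 MHz bandwidths are example values chosen here, not figures from the text.

```python
import math


def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Channel capacity in bit/s for an AWGN channel."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def snr_needed(capacity_bps: float, bandwidth_hz: float) -> float:
    """Linear SNR required to reach a given capacity in a given bandwidth."""
    return 2 ** (capacity_bps / bandwidth_hz) - 1


# 500 MHz of bandwidth at an SNR of 1 (0 dB).
wide = shannon_capacity(500e6, 1.0)
print(f"{wide / 1e6:.0f} Mbit/s")

# The same capacity squeezed into a 20 MHz channel.
narrow_snr = snr_needed(wide, 20e6)
print(f"{10 * math.log10(narrow_snr):.1f} dB")
```

Capacity grows linearly with bandwidth but only logarithmically with SNR, so matching the wide channel in 20 MHz would take an SNR of more than 75 dB.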

Ideally, the receiver signal detector should be matched to the transmitted signal in bandwidth, signal shape and time. Any mismatch results in loss of margin for the UWB radio link.

Channelization (sharing the channel with other links) is a complex problem subject to many practical variables. Typically two UWB links can share the same spectrum by using orthogonal time-hopping codes for pulse-position (time-modulated) systems, or orthogonal pulses and orthogonal codes for fast-pulse based systems.

Current forward error correction (FEC) technology, such as low-density parity-check (LDPC) codes, perhaps in combination with Reed-Solomon codes, can provide channel performance approaching the Shannon limit, as demonstrated recently in some very high data rate UWB pulsed systems. When stealth is required, some UWB formats (mainly pulse-based) can fairly easily be made to look like nothing more than a slight rise in background noise to any receiver that is unaware of the signal's complex pattern.

Multipath (distortion of a signal because it takes many different paths to the receiver) is an enemy of narrowband radio: it causes fading where wave interference is destructive. Some UWB systems use "rake" receiver techniques to recover multipath-generated copies of the original pulse and so improve performance at the receiver. Other UWB systems use channel equalization techniques to achieve the same purpose. Narrowband receivers can use similar techniques, but are limited by the poorer resolution capabilities of narrowband systems.

There has been much concern over interference between narrowband signals and UWB signals that share the same spectrum; traditionally the only radio technology that operated using pulses was the spark-gap transmitter, which was banned due to excessive interference. However, UWB uses much lower power. The subject was extensively covered in the proceedings that led to the adoption of the FCC rules in the US, and also in the six meetings relating to UWB of the ITU-R that led to the ITU-R Report and Recommendations on UWB technology. In particular, many common pieces of equipment emit impulsive noise (notably hair dryers), and the argument was successfully made that the noise floor would not be raised excessively by wider deployment of low-power wideband transmitters.

Applications

------------------------------------------

Due to the extremely low emission levels currently allowed by regulatory agencies, UWB systems tend to be short-range and indoors. However, due to the short duration of the UWB pulses, it is easier to engineer extremely high data rates, and data rate can be readily traded for range by simply aggregating pulse energy per data bit using either simple integration or by coding techniques. Conventional OFDM technology can also be used subject to the minimum bandwidth requirement of the regulations. High data rate UWB can enable wireless monitors, the efficient transfer of data from digital camcorders, wireless printing of digital pictures from a camera without the need for an intervening personal computer, and the transfer of files among cell phone handsets and other handheld devices like personal digital audio and video players.

UWB is used as a part of location systems and real-time location systems. The precision capabilities combined with the very low power make it ideal for certain radio-frequency-sensitive environments such as hospitals and other healthcare settings. Another benefit of UWB is the short broadcast time, which enables implementers of the technology to install orders of magnitude more transmitter tags in an environment relative to competitive technologies. US-based Parco Merged Media Corporation was the first systems developer to deploy a commercial version of this system in a Washington, DC hospital.

UWB is also used in "see-through-the-wall" precision radar imaging technology, precision positioning and tracking (using distance measurements between radios), and precision time-of-arrival-based localization approaches. It exhibits excellent efficiency with a spatial capacity of approximately 10,000,000,000,000 bit/s/m².

UWB is a possible technology for use in personal area networks and appears in the IEEE 802.15.3a draft PAN standard.

From Wikipedia, the free encyclopedia

Posted by pkm at 3:06 PM


Labels: technology

Organic light-emitting diode

An organic light-emitting diode (OLED) is any light-emitting diode (LED) whose emissive electroluminescent layer comprises a film of organic compounds. The layer usually contains a polymer substance that allows suitable organic compounds to be deposited. They are deposited in rows and columns onto a flat carrier by a simple "printing" process. The resulting matrix of pixels can emit light of different colors.

Such systems can be used in television screens, computer displays, portable system screens, advertising, information and indication. OLEDs can also be used in light sources for general space illumination, and large-area light-emitting elements. OLEDs typically emit less light per area than inorganic solid-state based LEDs which are usually designed for use as point-light sources.

A great benefit of OLED displays over traditional liquid crystal displays (LCDs) is that OLEDs do not require a backlight to function. Thus they draw far less power and, when powered from a battery, can operate longer on the same charge. OLED-based display devices also can be more effectively manufactured than LCDs and plasma displays. But degradation of OLED materials has limited the use of these materials. See Drawbacks.

OLED technology was also called Organic Electro-Luminescence (OEL), before the term "OLED" became standard.

History

------------------------------------------

Bernanose and co-workers first produced electroluminescence in organic materials by applying a high-voltage alternating current (AC) field to crystalline thin films of acridine orange and quinacrine. In 1960, researchers at Dow Chemical developed AC-driven electroluminescent cells using doped anthracene.

The low electrical conductivity of such materials limited light output until more conductive organic materials became available, especially the polyacetylene, polypyrrole, and polyaniline "Blacks". In a 1963 series of papers, Weiss et al. first reported high conductivity in iodine-"doped" oxidized polypyrrole. They achieved a conductivity of 1 S/cm. Unfortunately, this discovery was "lost", as was a 1974 report of a melanin-based bistable switch with a high conductivity "ON" state. This material emitted a flash of light when it switched.

In a subsequent 1977 paper, Shirakawa et al. reported high conductivity in similarly oxidized and iodine-doped polyacetylene. Heeger, MacDiarmid & Shirakawa received the 2000 Nobel Prize in Chemistry for "The discovery and development of conductive organic polymers". The Nobel citation made no reference to the earlier discoveries.

Modern work with electroluminescence in such polymers culminated with the 1990 paper by Burroughes et al. in the journal Nature reporting a very-high-efficiency green-light-emitting polymer. The OLED timeline since 1996 is well documented on the oled-info.com site.

Related technologies

------------------------------------------

Small molecules

OLED technology was first developed at Eastman Kodak Company by Dr. Ching Tang using small molecules. The production of small-molecule displays requires vacuum deposition, which makes the production process more expensive than other processing techniques (see below). Since this is typically carried out on glass substrates, these displays are also not flexible, though this limitation is not inherent to small-molecule organic materials. The term OLED traditionally refers to this type of device, though some are using the term SM-OLED.

Molecules commonly used in OLEDs include organo-metallic chelates (for example Alq3, used in the first organic light-emitting device) and conjugated dendrimers.

Recently a hybrid light-emitting layer has been developed that uses nonconductive polymers doped with light-emitting, conductive molecules. The polymer is used for its production and mechanical advantages without worrying about optical properties. The small molecules then emit the light and have the same longevity that they have in the SM-OLEDs.

PLED

Polymer light-emitting diodes (PLED) involve an electroluminescent conductive polymer that emits light when subjected to an electric current. Developed by Cambridge Display Technology, they are also known as Light-Emitting Polymers (LEP). They are used as a thin film for full-spectrum color displays and require a relatively small amount of power for the light produced. No vacuum is required, and the emissive materials can be applied on the substrate by a technique derived from commercial inkjet printing. The substrate used can be flexible, such as PET. Thus, flexible PLED displays may be produced inexpensively.

Typical polymers used in PLED displays include derivatives of poly(p-phenylene vinylene) and poly(fluorene). Substitution of side chains onto the polymer backbone may determine the color of emitted light or the stability and solubility of the polymer for performance and ease of processing.

TOLED

Transparent organic light-emitting device (TOLED) uses a proprietary transparent contact to create displays that can be made to be top-only emitting, bottom-only emitting, or both top and bottom emitting (transparent). TOLEDs can greatly improve contrast, making it much easier to view displays in bright sunlight.

SOLED

Stacked OLED (SOLED) uses a novel pixel architecture that is based on stacking the red, green, and blue subpixels on top of one another instead of next to one another as is commonly done in CRTs and LCDs. This improves display resolution up to threefold and enhances full-color quality.

Working principle

An OLED is composed of an emissive layer, a conductive layer, a substrate, and anode and cathode terminals. The layers are made of special organic polymer molecules that conduct electricity. Their levels of conductivity range from those of insulators to those of conductors, and so they are called organic semiconductors.

A voltage is applied across the OLED such that the anode is positive with respect to the cathode. This causes a current of electrons to flow through the device from cathode to anode. Thus, the cathode gives electrons to the emissive layer and the anode withdraws electrons from the conductive layer; in other words, the anode gives electron holes to the conductive layer.

Soon, the emissive layer becomes negatively charged, while the conductive layer becomes rich in positively charged holes. Electrostatic forces bring the electrons and the holes towards each other, and they recombine. This happens closer to the emissive layer, because in organic semiconductors holes are more mobile than electrons (unlike in inorganic semiconductors). The recombination causes a drop in the energy levels of electrons, accompanied by an emission of radiation whose frequency is in the visible region. That is why this layer is called emissive.

The device does not work when the anode is put at a negative potential with respect to the cathode. In this condition, holes move to the anode and electrons to the cathode, so they are moving away from each other and do not recombine.

Indium tin oxide is commonly used as the anode material. It is transparent to visible light and has a high work function which promotes injection of holes into the polymer layer. Metals such as aluminium and calcium are often used for the cathode as they have low work functions which promote injection of electrons into the polymer layer.

Advantages

------------------------------------------

The radically different manufacturing process of OLEDs lends itself to many advantages over flat-panel displays made with LCD technology. Since OLEDs can be printed onto any suitable substrate using inkjet printer or even screen printing technologies, they can theoretically have a significantly lower cost than LCDs or plasma displays. Printing OLEDs onto flexible substrates opens the door to new applications such as roll-up displays and displays embedded in clothing.

OLEDs enable a greater range of colors, brightness, and viewing angle than LCDs, because OLED pixels directly emit light. OLED pixel colors appear correct and unshifted, even as the viewing angle approaches 90 degrees from normal. LCDs use a backlight and cannot show true black, while an "off" OLED element produces no light and consumes no power. Energy is also wasted in LCDs because they require polarizers which filter out about half of the light emitted by the backlight. Additionally, color filters in color LCDs filter out two-thirds of the light.
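The two loss figures quoted above can be combined into a single estimate of how much backlight actually leaves a color LCD. This is back-of-the-envelope arithmetic that treats the two losses as independent and ignores every other loss in a real panel. The constant and function names are hypothetical.

```python
# Fractions of light that pass each stage, taken from the figures in the text.
POLARIZER_TRANSMISSION = 1.0 / 2.0     # polarizers filter out about half
COLOR_FILTER_TRANSMISSION = 1.0 / 3.0  # color filters remove two-thirds


def lcd_light_throughput(
    polarizer: float = POLARIZER_TRANSMISSION,
    color_filter: float = COLOR_FILTER_TRANSMISSION,
) -> float:
    """Fraction of backlight output that survives both filtering stages."""
    return polarizer * color_filter


if __name__ == "__main__":
    fraction = lcd_light_throughput()
    print(f"About {fraction:.1%} of the backlight reaches the viewer")
```

On these figures alone, roughly one-sixth of the backlight output is usable, which is part of why a directly emitting pixel can be more efficient.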

OLEDs also have a faster response time than standard LCD screens. Whereas a standard LCD currently has a response time of around 8 milliseconds (though it can be much lower, such as 2 milliseconds), an OLED can have a response time of less than 0.01 ms.

Commercial uses

------------------------------------------

OLED technology is used in commercial applications such as small screens for mobile phones and portable digital audio players (MP3 players), car radios, digital cameras and high-resolution microdisplays for head-mounted displays. Such portable applications favor the high light output of OLEDs for readability in sunlight, and their low power drain. Portable displays are also used intermittently, so the lower lifespan of OLEDs is less important here. Prototypes have been made of flexible and rollable displays which use OLEDs' unique characteristics. OLEDs have been used in most Motorola and Samsung color cell phones, as well as some Sony Ericsson phones, notably the Z610i, and some models of the Sony Walkman.

eMagin Corporation is the only manufacturer of active matrix OLED-on-silicon displays. These are currently being developed for the US military, the medical field and the future of entertainment where an individual can immerse themselves in a movie or a video game.

Posted by pkm at 2:46 PM

Labels: technology

Monday, August 27, 2007

VoiceXML

VoiceXML (VXML) is the W3C's standard XML format for specifying interactive voice dialogues between a human and a computer. It allows voice applications to be developed and deployed in an analogous way to HTML for visual applications. Just as HTML documents are interpreted by a visual web browser, VoiceXML documents are interpreted by a voice browser. A common architecture is to deploy banks of voice browsers attached to the public switched telephone network (PSTN) so that users can use a telephone to interact with voice applications.

Usage

-------------------------------

Many commercial VoiceXML applications have been deployed, processing many millions of telephone calls per day. These applications include: order inquiry, package tracking, driving directions, emergency notification, wake-up, flight tracking, voice access to email, customer relationship management, prescription refilling, audio newsmagazines, voice dialing, real-estate information and national directory assistance applications.

VoiceXML has tags that instruct the voice browser to provide speech synthesis, automatic speech recognition, dialog management, and audio playback. The following is an example of a VoiceXML document:

    <?xml version="1.0"?>
    <vxml version="2.0" xmlns="http://www.w3.org/2001/vxml">
      <form>
        <block>
          <prompt>
            Hello world!
          </prompt>
        </block>
      </form>
    </vxml>

When interpreted by a VoiceXML interpreter this will output "Hello world" with synthesized speech.

Typically, HTTP is used as the transport protocol for fetching VoiceXML pages. Some applications may use static VoiceXML pages, while others rely on dynamic VoiceXML page generation using an application server like Tomcat, Weblogic, IIS, or WebSphere. In a well-architected web application, the voice interface and the visual interface share the same back-end business logic.
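Dynamic page generation can be sketched with Python's standard library. The function `build_vxml_prompt` is a hypothetical helper, not part of any VoiceXML platform. It only builds a document with the same structure as the "Hello world" example, and an application server would return the resulting string in its HTTP response.

```python
import xml.etree.ElementTree as ET

VXML_NS = "http://www.w3.org/2001/vxml"


def build_vxml_prompt(text: str) -> str:
    """Return a VoiceXML 2.0 document that speaks the given text once."""
    # Serialize the VoiceXML namespace as the default (unprefixed) namespace.
    ET.register_namespace("", VXML_NS)
    vxml = ET.Element(f"{{{VXML_NS}}}vxml", {"version": "2.0"})
    form = ET.SubElement(vxml, f"{{{VXML_NS}}}form")
    block = ET.SubElement(form, f"{{{VXML_NS}}}block")
    prompt = ET.SubElement(block, f"{{{VXML_NS}}}prompt")
    prompt.text = text  # special characters such as & are escaped on output
    return ET.tostring(vxml, encoding="unicode")


if __name__ == "__main__":
    # In a real application the text would come from back-end business logic.
    print(build_vxml_prompt("Your package is out for delivery."))
```

Building the page through an XML library rather than string concatenation keeps the output well-formed even when the prompt text comes from a database or from user data.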

Historically, VoiceXML platform vendors have implemented the standard in different ways, and added proprietary features. But the VoiceXML 2.0 standard, adopted as a W3C Recommendation on 16 March 2004, clarified most areas of difference. The VoiceXML Forum, an industry group promoting the use of the standard, provides a conformance testing process that certifies vendors' implementations as conformant.

Related standards

-------------------------------

The W3C's Speech Interface Framework also defines these other standards closely associated with VoiceXML.

SRGS and SISR

The Speech Recognition Grammar Specification (SRGS) is used to tell the speech recognizer what sentence patterns it should expect to hear: these patterns are called grammars. Once the speech recognizer determines the most likely sentence it heard, it needs to extract the semantic meaning from that sentence and return it to the VoiceXML interpreter. This semantic interpretation is specified via the Semantic Interpretation for Speech Recognition (SISR) standard. SISR is used inside SRGS to specify the semantic results associated with the grammars, i.e., the set of ECMAScript assignments that create the semantic structure returned by the speech recognizer.
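To make the relationship between SRGS and SISR concrete, the sketch below embeds a small yes/no grammar in a Python string and lists each phrase next to the semantic script attached to it. The grammar is an illustrative example written for this purpose, and `phrases_and_tags` is a hypothetical helper. The code only inspects the XML and performs no speech recognition.

```python
import xml.etree.ElementTree as ET

SRGS_NS = "http://www.w3.org/2001/06/grammar"

# A minimal SRGS grammar in XML form. Each <tag> holds an SISR script:
# an ECMAScript assignment to "out", the semantic result of the rule.
YES_NO_GRAMMAR = """<grammar version="1.0" xmlns="http://www.w3.org/2001/06/grammar"
         xml:lang="en-US" mode="voice" root="answer" tag-format="semantics/1.0">
  <rule id="answer">
    <one-of>
      <item>yes <tag>out = true;</tag></item>
      <item>no <tag>out = false;</tag></item>
    </one-of>
  </rule>
</grammar>"""


def phrases_and_tags(grammar_xml: str) -> list[tuple[str, str]]:
    """Pair every grammar item's phrase with its semantic script (if any)."""
    root = ET.fromstring(grammar_xml)
    pairs = []
    for item in root.iter(f"{{{SRGS_NS}}}item"):
        tag = item.find(f"{{{SRGS_NS}}}tag")
        phrase = (item.text or "").strip()
        script = (tag.text or "").strip() if tag is not None else ""
        pairs.append((phrase, script))
    return pairs


if __name__ == "__main__":
    for phrase, script in phrases_and_tags(YES_NO_GRAMMAR):
        print(f"{phrase!r} -> {script}")
```

When the recognizer matches "yes", the attached script runs and the value of `out` is what the VoiceXML interpreter receives, rather than the raw words.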

SSML

The Speech Synthesis Markup Language (SSML) is used to decorate textual prompts with information on how best to render them in synthetic speech, for example, which speech synthesizer voice to use and when to speak louder or softer.

PLS

The Pronunciation Lexicon Specification (PLS) is used to define how words are pronounced. The generated pronunciation information is meant to be used by both speech recognizers and speech synthesizers in voice browsing applications.

CCXML

The Call Control eXtensible Markup Language (CCXML) is a complementary W3C standard. A CCXML interpreter is used on some VoiceXML platforms to handle the initial call setup between the caller and the voice browser, and to provide telephony services like call transfer and disconnect to the voice browser. CCXML can also be used in non-VoiceXML contexts such as teleconferencing.

History

AT&T, IBM, Lucent, and Motorola formed the VoiceXML Forum in March 1999, in order to develop a standard markup language for specifying voice dialogs. By September 1999 the Forum released VoiceXML 0.9 for member comment, and in March 2000 they published VoiceXML 1.0. Soon afterwards, the Forum turned over the control of the standard to the World Wide Web Consortium. The W3C produced several intermediate versions of VoiceXML 2.0, which reached the final "Recommendation" stage in March 2004.

VoiceXML 2.1 added a relatively small set of additional features to VoiceXML 2.0, based on feedback from implementations of the 2.0 standard. It is backward compatible with VoiceXML 2.0 and reached W3C Recommendation status in June 2007.

Future versions of the standard

VoiceXML 3.0 will be the next major release of VoiceXML, with new major features. It will use a new XML statechart description language called SCXML.

Posted by pkm at 10:53 AM

Labels: technology

Sunday, August 26, 2007

LightScribe

LightScribe is an optical disc recording technology that utilizes specially coated recordable CD and DVD media to produce laser-etched labels.

The purpose of LightScribe is to allow users to create direct-to-disc labels (as opposed to stick-on labels), using their optical disc writer. Special discs and a compatible disc writer are required. After burning data to the read-side of the disc, the user simply turns the medium over and inserts it with the label side down. The drive's laser then etches into the label side in such a way that an image is produced.

History

-------------------------------------------

LightScribe is a registered trademark of the Hewlett-Packard Development Company, L.P. LightScribe was conceived by an HP engineer (Daryl Anderson) in Corvallis, Oregon, and brought to market through the joint design efforts of HP's imaging and optical storage divisions.

Mode of operation

-------------------------------------------

The surface of a LightScribe disc is coated with a reactive dye that changes color when it absorbs 780 nm infrared laser light. The etched label will show no noticeable fading under exposure to indoor lighting for at least 2 years. Optical media should always be stored in a protective sleeve or case that keeps the data content in the dark and safe from scratches. If stored this way, the label should last the life of the disc in real-world use.

LightScribe labels burn in concentric circles, moving outward from the center of the disc. Images with the largest diameters will take longest to burn.

Initially LightScribe was monochromatic, a grey etch on a gold-looking surface. Since late 2006, LightScribe discs have also been available in colors for categorization. The "burning" is still monochromatic, but the backgrounds can now be produced in various colors, under the v1.2 specification.

Currently it's not possible to rewrite a LightScribe label but it's possible to add more content to a label that is already burned.

The center of every LightScribe disc has a special code that allows the drive to know the precise rotational position of the disc. This in combination with the drive hardware allows it to know the precise position from the center outwards, and the disc can be labeled while spinning at high speed using these references. It also serves a secondary purpose: The same disc can be labeled with the same label again, several times. Each successive labeling will darken the blacks and generally produce a better image, and the successive burns will line up perfectly. However it is recommended to use the Control Panel to modify the printing parameters and have images with higher contrast.

Posted by pkm at 12:35 PM

Wibree

Wibree is a digital radio technology (intended to become an open standard of wireless communications) designed for ultra low power consumption (button cell batteries) within a short range (10 meters / 30 ft) based around low-cost transceiver microchips in each device.

History

---------------------------------

In 2001, Nokia researchers determined that there were various scenarios that contemporary wireless technologies did not address. To address the problem, Nokia Research Center started the development of a wireless technology adapted from the Bluetooth standard which would provide lower power usage and price while minimizing the differences between Bluetooth and the new technology. The results were published in 2004 using the name Bluetooth Low End Extension. After further development with partners, e.g., within the EU FP6 project MIMOSA, the technology was released to the public in October 2006 under the brand name Wibree. After negotiations with Bluetooth SIG members, an agreement was reached in June 2007 to include Wibree in a future Bluetooth specification as an ultra-low-power Bluetooth technology.

Technical information

---------------------------------

Wibree is designed to work side-by-side with and complement Bluetooth. It operates in the 2.4 GHz ISM band with a physical-layer bit rate of 1 Mbit/s. Main applications include devices such as wrist watches, wireless keyboards, toys and sports sensors where low power consumption is a key design requirement. The technology was announced on 3 October 2006 by Nokia. Partners that currently license the technology and cooperate in defining the specification are Nordic Semiconductor, Broadcom Corporation, CSR and Epson. Other contributors are Suunto and Taiyo Yuden.

Wibree is not designed to replace Bluetooth, but rather to complement the technology in supported devices. Wibree-enabled devices will be smaller and more energy-efficient than their Bluetooth counterparts. This is especially important in devices such as wristwatches, where Bluetooth models may be too large and heavy to be comfortable. Replacing Bluetooth with Wibree will make the devices closer in dimensions and weight to current standard wristwatches.

Bob Iannucci, head of Nokia's Research Centre, claims the technology is up to ten times more efficient than Bluetooth. Reportedly, it will have an output power around -6 dBm. Nordic Semiconductor is aiming to sample Wibree chips during the second half of 2007.
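For readers less used to decibel units, the reported output power can be converted to milliwatts with the standard definition of dBm (decibels relative to 1 mW). The function name is hypothetical.

```python
def dbm_to_milliwatts(power_dbm: float) -> float:
    """Convert a power level in dBm to milliwatts (0 dBm = 1 mW)."""
    return 10.0 ** (power_dbm / 10.0)


if __name__ == "__main__":
    print(f"-6 dBm = {dbm_to_milliwatts(-6.0):.3f} mW")
```

An output of -6 dBm is therefore about a quarter of a milliwatt.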

From Wikipedia, the free encyclopedia

Posted by pkm at 12:26 PM

Saturday, August 25, 2007

Radio-frequency identification

Radio-frequency identification (RFID) is an automatic identification method, relying on storing and remotely retrieving data using devices called RFID tags or transponders.

An RFID tag is an object that can be stuck on or incorporated into a product, animal, or person for the purpose of identification using radio waves. Some tags can be read from several meters away and beyond the line of sight of the reader.

Most RFID tags contain at least two parts. One is an integrated circuit for storing and processing information, modulating and demodulating a radio-frequency (RF) signal, and perhaps other specialized functions. The second is an antenna for receiving and transmitting the signal. A technology called chipless RFID allows for discrete identification of tags without an integrated circuit, thereby allowing tags to be printed directly onto assets at lower cost than traditional tags.

Today, a significant thrust in RFID use is in enterprise supply chain management, improving the efficiency of inventory tracking and management. However, a threat is looming that the current growth and adoption in enterprise supply chain market will not be sustainable. A fair cost-sharing mechanism, rational motives and justified returns from RFID technology investments are the key ingredients to achieve long-term and sustainable RFID technology adoption.

History of RFID tags

-------------------------------------------------

In 1946 Léon Theremin invented an espionage tool for the Soviet Union which retransmitted incident radio waves with audio information. Sound waves vibrated a diaphragm which slightly altered the shape of the resonator, which modulated the reflected radio frequency. Even though this device was a passive covert listening device, not an identification tag, it has been attributed as the first known device and a predecessor to RFID technology. The technology used in RFID has been around since the early 1920s according to one source (although the same source states that RFID systems have been around just since the late 1960s).

A more similar technology, the IFF transponder, was invented by the British in 1939 and was routinely used by the Allies in World War II to identify airplanes as friend or foe. Transponders are still used by military and commercial aircraft to this day.

Another early work exploring RFID is the landmark 1948 paper by Harry Stockman, titled "Communication by Means of Reflected Power" (Proceedings of the IRE, pp 1196–1204, October 1948). Stockman predicted that "…considerable research and development work has to be done before the remaining basic problems in reflected-power communication are solved, and before the field of useful applications is explored."

Mario Cardullo's U.S. Patent 3,713,148 in 1973 was the first true ancestor of modern RFID: a passive radio transponder with memory. The initial device was passive, powered by the interrogating signal, and consisted of a transponder with 16-bit memory for use as a toll device; it was demonstrated in 1971 to the New York Port Authority and other potential users. The basic Cardullo patent covers the use of RF, sound and light as transmission media. The original business plan presented to investors in 1969 showed uses in transportation (automotive vehicle identification, automatic toll system, electronic license plate, electronic manifest, vehicle routing, vehicle performance monitoring), banking (electronic check book, electronic credit card), security (personnel identification, automatic gates, surveillance) and medical (identification, patient history).

A very early demonstration of reflected power (modulated backscatter) RFID tags, both passive and active, was done by Steven Depp, Alfred Koelle and Robert Freyman at the Los Alamos Scientific Laboratory in 1973. The portable system operated at 915 MHz and used 12-bit tags. This technique is used by the majority of today's UHF and microwave RFID tags.

The first patent to be associated with the abbreviation RFID was granted to Charles Walton in 1983 (U.S. Patent 4,384,288).

RFID tags

-------------------------------------------------

RFID tags come in three general varieties: passive, active, or semi-passive (also known as battery-assisted). Passive tags require no internal power source, whereas semi-passive and active tags require a power source, usually a small battery.

Passive

Passive RFID tags have no internal power supply. The minute electrical current induced in the antenna by the incoming radio frequency signal provides just enough power for the CMOS integrated circuit in the tag to power up and transmit a response. Most passive tags signal by backscattering the carrier wave from the reader. This means that the antenna has to be designed to both collect power from the incoming signal and also to transmit the outbound backscatter signal. The response of a passive RFID tag is not necessarily just an ID number; the tag chip can contain non-volatile EEPROM for storing data.

Passive tags have practical read distances ranging from about 10 cm (4 in.) (ISO 14443) up to a few meters (Electronic Product Code (EPC) and ISO 18000-6), depending on the chosen radio frequency and antenna design/size. Due to their simplicity in design they are also suitable for manufacture with a printing process for the antennas. The lack of an onboard power supply means that the device can be quite small: commercially available products exist that can be embedded in a sticker, or under the skin in the case of low frequency RFID tags.

In 2006, Hitachi, Ltd. developed a passive device called the µ-Chip measuring 0.15×0.15 mm (not including the antenna), and thinner than a sheet of paper (7.5 micrometers). Silicon-on-Insulator (SOI) technology is used to achieve this level of integration. The Hitachi µ-Chip can wirelessly transmit a 128-bit unique ID number which is hard coded into the chip as part of the manufacturing process. The unique ID in the chip cannot be altered, providing a high level of authenticity to the chip and ultimately to the items the chip may be permanently attached or embedded into. The Hitachi µ-Chip has a typical maximum read range of 30 cm (1 foot). In February 2007 Hitachi unveiled an even smaller RFID device measuring 0.05×0.05 mm, and thin enough to be embedded in a sheet of paper. The new chips can store as much data as the older µ-chips, and the data contained on them can be extracted from as far away as a few hundred metres. The ongoing problem with all RFIDs is that they need an external antenna which is 80 times bigger than the chip in the best version thus far developed.

Alien Technology's Fluidic Self Assembly (FSA), SmartCode's Flexible Area Synchronized Transfer (FAST) and Symbol Technologies' PICA process are claimed to further reduce tag costs through massively parallel production. Alien Technology and SmartCode are currently using their processes to manufacture tags, while Symbol Technologies' PICA process is still in the development phase. Alternative methods of production such as FAST, FSA and PICA could potentially reduce tag costs dramatically and, because of the volumes achievable, could in turn drive economies of scale for various silicon fabricators as well. Some passive RFID vendors believe that industry benchmarks for tag costs can eventually be achieved as new low-cost volume production systems are implemented more broadly.

Non-silicon tags made from polymer semiconductors are currently being developed by several companies globally. Simple laboratory printed polymer tags operating at 13.56 MHz were demonstrated in 2005 by both PolyIC (Germany) and Philips (The Netherlands). If successfully commercialized, polymer tags will be roll-printable, like a magazine, and much less expensive than silicon-based tags. The end game for most item-level tagging over the next few decades may be that RFID tags will be wholly printed – the same way a barcode is today – and be virtually free, like a barcode. However, substantial technical and economic hurdles must be surmounted to accomplish such an end: hundreds of billions of dollars have been invested over the last three decades in silicon processing, resulting in a per-feature cost which is actually less than that of conventional printing.

Active

Unlike passive RFID tags, active RFID tags have their own internal power source, which is used to power the integrated circuits and broadcast the signal to the reader. Active tags are typically much more reliable (e.g. fewer errors) than passive tags because they can conduct a "session" with a reader. Because of their onboard power supply, active tags also transmit at higher power levels than passive tags, allowing them to be more effective in "RF challenged" environments like water (including humans/cattle, which are mostly water), metal (shipping containers, vehicles), or at longer distances. Many active tags today have practical ranges of hundreds of meters (approximately 500 m/1500 feet) and a battery life of up to 10 years. Some active RFID tags include sensors; temperature logging, for example, has been used to monitor perishable goods like fresh produce or certain pharmaceutical products. Other sensors that have been married with active RFID include humidity, shock/vibration, light, radiation, and atmospherics like ethylene. Active tags also typically have larger memories than passive tags, as well as the ability to store additional information sent by the transceiver. The United States Department of Defense has successfully used active tags to reduce logistics costs and improve supply chain visibility for more than 15 years.

Semi-passive

Semi-passive tags are similar to active tags as they have their own power source, but the battery is used just to power the microchip and not broadcast a signal. The RF energy is reflected back to the reader like a passive tag.

Antenna types

The antenna used for an RFID tag is affected by the intended application and the frequency of operation. Low-frequency (LF) passive tags are normally inductively coupled, and because the voltage induced is proportional to frequency, many coil turns are needed to produce enough voltage to operate an integrated circuit. Compact LF tags, like glass-encapsulated tags used in animal and human identification, use a multilayer coil (3 layers of 100–150 turns each) wrapped around a ferrite core.
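The statement that induced voltage is proportional to frequency follows from Faraday's law: for a coil of N turns and area A in a sinusoidal field of peak flux density B, the peak voltage is 2πfNAB. The sketch below uses this idealized formula to compare turn counts. The 125 kHz LF frequency, the coil area, the field strength and the target voltage are all assumed values chosen for illustration, and effects such as resonance and the ferrite core are ignored.

```python
import math


def induced_peak_voltage(
    frequency_hz: float, turns: float, area_m2: float, flux_density_t: float
) -> float:
    """Peak open-circuit voltage of a coil in a uniform sinusoidal field."""
    return 2.0 * math.pi * frequency_hz * turns * area_m2 * flux_density_t


def turns_for_voltage(
    target_v: float, frequency_hz: float, area_m2: float, flux_density_t: float
) -> float:
    """Number of turns needed to reach target_v under the same idealization."""
    return target_v / (2.0 * math.pi * frequency_hz * area_m2 * flux_density_t)


if __name__ == "__main__":
    area = 1e-3      # assumed coil area in square meters
    field = 1e-6     # assumed peak flux density in tesla
    target = 1.0     # assumed voltage needed by the chip
    lf = turns_for_voltage(target, 125e3, area, field)     # assumed LF frequency
    hf = turns_for_voltage(target, 13.56e6, area, field)   # HF frequency from the text
    print(f"LF needs {lf:.0f} turns, HF needs {hf:.1f} turns (ratio {lf / hf:.1f})")
```

Whatever values are assumed for area and field, the ratio of turn counts equals the ratio of the two frequencies, a factor of about 108 here. That is consistent with LF tags needing hundreds of turns where an HF tag needs only a handful.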

At 13.56 MHz (High frequency or HF), a planar spiral with 5–7 turns over a credit-card-sized form factor can be used to provide ranges of tens of centimeters. These coils are less costly to produce than LF coils, since they can be made using lithographic techniques rather than by wire winding, but two metal layers and an insulator layer are needed to allow for the crossover connection from the outermost layer to the inside of the spiral where the integrated circuit and resonance capacitor are located.

Ultra-high frequency (UHF) and microwave passive tags are usually radiatively coupled to the reader antenna and can employ conventional dipole-like antennas. Only one metal layer is required, reducing the cost of manufacturing. Dipole antennas, however, are a poor match to the high and slightly capacitive input impedance of a typical integrated circuit. Folded dipoles, or short loops acting as inductive matching structures, are often employed to improve power delivery to the IC. Half-wave dipoles (16 cm at 900 MHz) are too big for many applications; for example, tags embedded in labels must be less than 100 mm (4 inches) in extent. To reduce the length of the antenna, antennas can be bent or meandered, and capacitive tip-loading or bowtie-like broadband structures are also used. Compact antennas usually have gain less than that of a dipole (that is, less than 2 dBi) and can be regarded as isotropic in the plane perpendicular to their axis.
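The half-wave dipole length quoted above can be checked from the free-space wavelength, which is c/f. This is a minimal sketch that ignores the small shortening factor applied to practical dipoles. The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def half_wave_length_m(frequency_hz: float) -> float:
    """Half of the free-space wavelength at the given frequency."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * frequency_hz)


if __name__ == "__main__":
    print(f"900 MHz:   {half_wave_length_m(900e6) * 100:.1f} cm")
    print(f"13.56 MHz: {half_wave_length_m(13.56e6):.1f} m")
```

At 900 MHz the result is about 16.7 cm, in line with the figure in the text. At 13.56 MHz a half-wave dipole would be roughly 11 m long, which helps explain why HF tags use inductively coupled coils rather than dipoles.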

Dipoles couple to radiation polarized along their axes, so the visibility of a tag with a simple dipole-like antenna is orientation-dependent. Tags with two orthogonal or nearly-orthogonal antennas, often known as dual-dipole tags, are much less dependent on orientation and polarization of the reader antenna, but are larger and more expensive than single-dipole tags.

Patch antennas are used to provide service in close proximity to metal surfaces, but a structure with good bandwidth is 3–6 mm thick, and the need to provide a ground layer and ground connection increases cost relative to simpler single-layer structures.

HF and UHF tag antennas are usually fabricated from copper or aluminum. Conductive inks have seen some use in tag antennas but have encountered problems with IC adhesion and environmental stability.

Tag Attachment

There are three kinds of RFID tags, classified by how they attach to the identified object: attachable, implantable, and insertion tags. In addition to these conventional RFID tags, Eastman Kodak Company has filed two patent applications for monitoring the ingestion of medicine using a digestible RFID tag.

Tagging Positions

The tagging position, that is, the place where an RFID tag is embedded, attached, injected or digested, can influence the air-interface performance of passive UHF RFID tags.

In many cases, optimum power from the RFID reader is not required to operate passive tags. However, in cases where the Effective Radiated Power (ERP) level and the distance between reader and tags are fixed, such as in a manufacturing setting, it is important to know the location in a tagged object where a passive tag can operate optimally.

R-Spot or Resonance Spot, L-Spot or Live Spot and D-Spot or Dead Spot are defined to specify the location of RFID tags in a tagged object, where the tags can still receive power from a reader within specified ERP level and distance.

From Wikipedia, the free encyclopedia

Posted by pkm at 5:40 AM

Labels: innovation, technology

Thursday, August 23, 2007

Grid Computing

From Wikipedia, the free encyclopedia

Grid computing is a phrase in distributed computing which can have several meanings:

-A local computer cluster which is like a "grid" because it is composed of multiple nodes.

-Offering online computation or storage as a metered commercial service, known as utility computing, computing on demand, or cloud computing.

-The creation of a "virtual supercomputer" by using spare computing resources within an organization.

-The creation of a "virtual supercomputer" by using a network of geographically dispersed computers. Volunteer computing, which generally focuses on scientific, mathematical, and academic problems, is the most common application of this technology.

These varying definitions cover the spectrum of "distributed computing", and sometimes the two terms are used as synonyms. This article focuses on distributed computing technologies which are not in the traditional dedicated clusters; otherwise, see computer cluster.

Functionally, one can also speak of several types of grids:

-Computational grids (including CPU-scavenging grids), which focus primarily on computationally intensive operations.

-Data grids, which provide controlled sharing and management of large amounts of distributed data.

-Equipment grids, which have a primary piece of equipment (e.g. a telescope), and where the surrounding grid is used to control the equipment remotely and to analyze the data produced.

Grids versus conventional supercomputers

----------------------------------------------------------------

"Distributed" or "grid computing" in general is a special type of parallel computing which relies on complete computers (with onboard CPU, storage, power supply, network interface, etc.) connected to a network (private, public or the Internet) by a conventional network interface, such as Ethernet. This is in contrast to the traditional notion of a supercomputer, which has many CPUs connected by a local high-speed computer bus.

The primary advantage of distributed computing is that each node can be purchased as commodity hardware, which when combined can produce similar computing resources to a many-CPU supercomputer, but at lower cost. This is due to the economies of scale of producing commodity hardware, compared to the lower efficiency of designing and constructing a small number of custom supercomputers. The primary performance disadvantage is that the various CPUs and local storage areas do not have high-speed connections. This arrangement is thus well-suited to applications where multiple parallel computations can take place independently, without the need to communicate intermediate results between CPUs.

The high-end scalability of geographically dispersed grids is generally favorable, due to the low need for connectivity between nodes relative to the capacity of the public Internet. Conventional supercomputers also create physical challenges in supplying sufficient electricity and cooling capacity in a single location. Both supercomputers and grids can be used to run multiple parallel computations at the same time, which might be different simulations for the same project, or computations for completely different applications. The infrastructure and programming considerations needed to do this on each type of platform are different, however.

There are also differences in programming and deployment. It can be costly and difficult to write programs so that they can be run in the environment of a supercomputer, which may have a custom operating system, or require the program to address concurrency issues. If a problem can be adequately parallelized, a "thin" layer of "grid" infrastructure can cause conventional, standalone programs to run on multiple machines (but each given a different part of the same problem). This makes it possible to write and debug programs on a single conventional machine, and eliminates complications due to multiple instances of the same program running in the same shared memory and storage space at the same time.
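The idea of giving each machine a different part of the same problem can be sketched as follows. This is an illustrative sketch only: the prime-counting task, the function names, and the use of worker threads as stand-ins for separate grid nodes are all assumptions chosen to keep the example self-contained.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_work_units(start, stop, unit_size):
    """Yield (lo, hi) half-open ranges that together cover [start, stop)."""
    for lo in range(start, stop, unit_size):
        yield lo, min(lo + unit_size, stop)

def is_prime(n):
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True

def count_primes(bounds):
    """Standalone computation on one work unit; needs no data from other units."""
    lo, hi = bounds
    return sum(1 for n in range(lo, hi) if is_prime(n))

units = list(split_into_work_units(0, 10_000, 1_000))

# Worker threads stand in for independent grid nodes in this sketch.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_counts = list(pool.map(count_primes, units))

total = sum(partial_counts)
print(total)
```

Because `count_primes` never exchanges intermediate results with other units, it can be written and debugged on a single machine and only the final partial counts need to be combined.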

Design considerations and variations

----------------------------------------------------------------

One feature of distributed grids is that they can be formed from computing resources belonging to multiple individuals or organizations (known as multiple administrative domains). This can facilitate commercial transactions, as in utility computing, or make it easier to assemble volunteer computing networks.

One disadvantage of this feature is that the computers which are actually performing the calculations might not be entirely trustworthy. The designers of the system must thus introduce measures to prevent malfunctions or malicious participants from producing false, misleading, or erroneous results, and from using the system as an attack vector. This often involves assigning work randomly to different nodes (presumably with different owners) and checking that at least two different nodes report the same answer for a given work unit. Discrepancies would identify malfunctioning and malicious nodes.
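The redundancy check described above can be sketched as a small quorum function. The function name, the dictionary layout, and the tie-handling rule are hypothetical choices for illustration, not the behaviour of any particular grid middleware.

```python
from collections import Counter

def validate_work_unit(reports, quorum=2):
    """Check the results reported for one work unit.

    reports maps a node id to the (hashable) result that node returned.
    Returns (accepted_result, suspect_nodes). accepted_result is None when
    no single result is reported by at least `quorum` nodes, or when two
    results tie for the most votes.
    """
    if not reports:
        return None, []
    ranked = Counter(reports.values()).most_common(2)
    value, votes = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == votes
    if votes < quorum or tied:
        return None, []
    # Nodes that disagree with the accepted result are flagged for review.
    suspects = sorted(node for node, result in reports.items() if result != value)
    return value, suspects

print(validate_work_unit({"node-a": 42, "node-b": 42, "node-c": 41}))
```

When no result reaches the quorum, a scheduler would typically send the same work unit to additional nodes rather than accept either answer.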

Due to the lack of central control over the hardware, there is no way to guarantee that nodes will not drop out of the network at random times. Some nodes (like laptops or dialup Internet customers) may also be available for computation but not network communications for unpredictable periods. These variations can be accommodated by assigning large work units (thus reducing the need for continuous network connectivity) and reassigning work units when a given node fails to report its results as expected.
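Reassigning work units when a node fails to report can be sketched with a small scheduler. The class name, the deadline parameter, and the explicit `now` timestamps (used instead of a real clock so the behaviour is easy to follow) are assumptions made for this illustration.

```python
class WorkUnitScheduler:
    """Hands out work units and requeues those whose node missed the deadline."""

    def __init__(self, unit_ids, deadline_s):
        self.pending = list(unit_ids)   # units not currently assigned
        self.in_flight = {}             # unit id -> (node id, time assigned)
        self.results = {}               # unit id -> accepted result
        self.deadline_s = deadline_s

    def requeue_expired(self, now):
        """Move units whose deadline has passed back to the pending queue."""
        for unit, (_node, assigned_at) in list(self.in_flight.items()):
            if now - assigned_at > self.deadline_s:
                del self.in_flight[unit]
                self.pending.append(unit)

    def assign(self, node_id, now):
        """Give the next pending unit to node_id, or None if nothing is pending."""
        self.requeue_expired(now)
        if not self.pending:
            return None
        unit = self.pending.pop(0)
        self.in_flight[unit] = (node_id, now)
        return unit

    def report(self, node_id, unit, result):
        """Accept a result only from the node that currently holds the unit."""
        holder = self.in_flight.get(unit)
        if holder is None or holder[0] != node_id:
            return False
        del self.in_flight[unit]
        self.results[unit] = result
        return True

scheduler = WorkUnitScheduler(["u1"], deadline_s=3600)
first = scheduler.assign("laptop", now=0)       # laptop takes u1, then goes offline
second = scheduler.assign("desktop", now=4000)  # deadline passed, u1 is reassigned
```

A longer deadline suits large work units on intermittently connected nodes, at the cost of waiting longer before a lost unit is handed to another node.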

The impacts of trust and availability on performance and development difficulty can influence the choice of whether to deploy onto a dedicated computer cluster, to idle machines internal to the developing organization, or to an open external network of volunteers or contractors.

In many cases, the participating nodes must trust the central system not to abuse the access that is being granted, by interfering with the operation of other programs, mangling stored information, transmitting private data, or creating new security holes. Other systems employ measures to reduce the amount of trust "client" nodes must place in the central system, such as placing applications in virtual machines.

Public systems or those crossing administrative domains (including different departments in the same organization) often result in the need to run on heterogeneous systems, using different operating systems and hardware architectures. With many languages, there is a tradeoff between investment in software development and the number of platforms that can be supported (and thus the size of the resulting network). Cross-platform languages can reduce the need to make this tradeoff, though potentially at the expense of high performance on any given node (due to run-time interpretation or lack of optimization for the particular platform).

Various middleware projects have created generic infrastructure, to allow various scientific and commercial projects to harness a particular associated grid, or for the purpose of setting up new grids. BOINC is a common one for academic projects seeking public volunteers; more are listed at the end of the article.

CPU scavenging

----------------------------------------------------------------

CPU-scavenging, cycle-scavenging, cycle stealing, or shared computing creates a "grid" from the unused resources in a network of participants (whether worldwide or internal to an organization). Usually this technique is used to make use of instruction cycles on desktop computers that would otherwise be wasted at night, during lunch, or even in the scattered seconds throughout the day when the computer is waiting for user input or slow devices.

Volunteer computing projects use the CPU scavenging model almost exclusively.

In practice, participating computers also donate some supporting amount of disk storage space, RAM, and network bandwidth, in addition to raw CPU power. Nodes in this model are also more vulnerable to going "offline" in one way or another from time to time, as their owners use their resources for their primary purpose.

Current projects and applications

----------------------------------------------------------------

Grids offer a way to solve Grand Challenge problems like protein folding, financial modeling, earthquake simulation, and climate/weather modeling. Grids offer a way of using the information technology resources optimally inside an organization. They also provide a means for offering information technology as a utility for commercial and non-commercial clients, with those clients paying only for what they use, as with electricity or water.

Grid computing is presently being applied successfully by the National Science Foundation's National Technology Grid, NASA's Information Power Grid, Pratt & Whitney, Bristol-Myers Squibb Co., and American Express.

One of the most famous cycle-scavenging networks is SETI@home, which was using more than 3 million computers to achieve 23.37 sustained teraflops (979 lifetime teraflops) as of September 2001.

As of May 2005, Folding@home had achieved peaks of 186 teraflops on over 160,000 machines.

Another well-known project is distributed.net, which was started in 1997 and has run a number of successful projects in its history.

The NASA Advanced Supercomputing facility (NAS) has run genetic algorithms using the Condor cycle scavenger running on about 350 Sun and SGI workstations.

United Devices operates the United Devices Cancer Research Project based on its Grid MP product, which cycle scavenges on volunteer PCs connected to the Internet. As of June 2005, the Grid MP ran on about 3,100,000 machines.

The Enabling Grids for E-sciencE (EGEE) project, which is based in the European Union and includes sites in Asia and the United States, is a follow-up project to the European DataGrid (EDG) and is arguably the largest computing grid on the planet. It and the LHC Computing Grid (LCG) have been developed to support the experiments using the CERN Large Hadron Collider. The LCG project is driven by CERN's need to handle huge amounts of data, where storage rates of several gigabytes per second (10 petabytes per year) are required. A list of active sites participating within LCG can be found online, as can real-time monitoring of the EGEE infrastructure. The relevant software and documentation are also publicly accessible.

Posted by pkm at 1:45 AM

Labels: computer, innovation, technology

Saturday, August 18, 2007

DVB-H

From Wikipedia, the free encyclopedia

DVB-H (Digital Video Broadcasting - Handheld) is a technical specification for bringing broadcast services to handheld receivers. DVB-H was formally adopted as ETSI standard EN 302 304 in November 2004. The DVB-H specification (EN 302 304) can be downloaded from the official DVB-H website. DVB-H is officially endorsed by the European Union. The major competitor of this technology is Digital Multimedia Broadcasting (DMB).

Technical explanation

---------------------------------------------------------------

It is the latest development within the set of DVB transmission standards. DVB-H technology is a superset of the very successful DVB-T (Digital Video Broadcasting - Terrestrial) system for digital terrestrial television, with additional features to meet the specific requirements of handheld, battery-powered receivers.

DVB-H can offer a high-data-rate downstream channel that can be used on its own or as an enhancement of mobile telecommunication networks, which many typical handheld terminals are able to access anyway.

Time slicing technology is employed to reduce power consumption for small handheld terminals. IP datagrams are transmitted as data bursts in small time slots. Each burst may contain up to 2 Mbits of data (including parity bits). The data is protected by a Reed-Solomon code that adds 64 parity bytes for each 191 data bytes. The front end of the receiver switches on only for the time interval when the data burst of a selected service is on air. Within this short period of time a high data rate is received, which can be stored in a buffer. This buffer can either store the downloaded applications or play out live streams.
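The power saving from time slicing can be estimated with simple arithmetic. This sketch uses the 2 Mbit burst size and the 191:64 data-to-parity ratio from the text; the 10 Mbit/s burst rate and 350 kbit/s average service rate are assumed, illustrative figures, and receiver wake-up and synchronization time is ignored.

```python
def time_slicing_estimate(burst_bits, burst_rate_bps, service_rate_bps,
                          data_units=191, parity_units=64):
    """Estimate how long the receiver front end is on for one service.

    burst_bits counts everything in the burst, parity included.
    """
    # Share of each burst that carries service data rather than parity.
    payload_bits = burst_bits * data_units / (data_units + parity_units)
    on_time_s = burst_bits / burst_rate_bps      # front end switched on
    cycle_s = payload_bits / service_rate_bps    # time until the next burst is needed
    return {
        "payload_bits": payload_bits,
        "on_time_s": on_time_s,
        "cycle_s": cycle_s,
        "off_fraction": 1 - on_time_s / cycle_s,
    }

# Assumed rates: 10 Mbit/s during the burst, 350 kbit/s average for the service.
estimate = time_slicing_estimate(2_000_000, 10_000_000, 350_000)
print(f"front end off for {estimate['off_fraction']:.0%} of the time")
```

Under these assumptions the front end is active for a fraction of a second every few seconds, which is where the reduction in power consumption comes from.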